In spring 2020, the COVID-19 pandemic led to restrictions on private and working lives worldwide. As facilities and universities remained closed, many researchers could not conduct studies as initially planned, which mainly affected laboratory studies and qualitative research designed for face-to-face settings (see also ; ). However, the strict limitations also proved fruitful from a methodological point of view, as new online solutions were developed for already familiar methods and new possibilities to conduct user-centered research in remote settings were explored. To enable qualitative studies to be conducted under social distancing policies, several remote methods and their (dis-)advantages have been discussed in the literature (e.g., ; ; ; ), also from an ethical point of view (; ). This research shows that remote qualitative research designs do not require completely new approaches and ethical standards, but that adjustments are necessary and need to be reflected upon. Especially for more complex multi-method designs, moving a study online requires more than just switching to chat applications or videoconferencing systems.

Like many other researchers, we had to shift a study intended for a face-to-face setting to be conducted remotely due to COVID-19 restrictions. We extended the established method of self-confrontation interviews (; ) to investigate perceptions of different forms of hate speech and developed an approach that we term the remote self-confrontation interview method. The qualitative multi-method design combines direct observations, self-confrontation interviews, semi-structured qualitative interviews, and problem-focused tasks, all of which are performed in a remote setting. We discuss the basic idea of the approach and how it can be utilized to study online users’ perceptions of digital media content, drawing on our experiences with the mentioned study in which we successfully piloted the method.

We start by introducing the differentiation between first- and second-level perceptions of digital media content and how these perceptions have been investigated previously. We then detail our implementation of the remote self-confrontation interview method, including study preparation, data collection, and analysis. Finally, we illustrate the challenges and advantages of the approach for both participants and researchers. Our experiences show that the remote self-confrontation interview method is an effective, resource-saving, and broadly applicable approach to study online users’ reactions and perceptions of digital media content, allowing researchers to gain deep insights—even from a distance.

Investigating First- and Second-Level Perceptions of Digital Media Content

In media reception and effects research, market research and user experience studies, understanding how—and first and foremost: whether—digital media content is perceived is crucial. Advertisers need to know if a digital advertisement is recognized and, if so, how it is received by potential customers (e.g., ). Similarly, communication researchers are interested in which parts of a news website are looked at and how this influences learning (e.g., ) or which posts within a social media feed attract attention (e.g., ). All these research questions are united by their focus on (more or less implicit) perceptions of online users. However, the concept of perception is vast and includes different levels from the initial (non-)attention to digital media content to evaluations and reactions resulting from more in-depth considerations.

Building on , we suggest differentiating between two stages of perception, which are central for understanding users’ engagement with digital media content: first- and second-level perceptions. First-level perceptions refer to a situation in which “users decide to slow down, or even stop scrolling through their newsfeed, to look more carefully at a specific post, based on message cues that are immediately visible” (, p. 1223). Despite a lack of overall knowledge and research about this first contact with digital media content, be it in a social media feed or on a website, capturing this initial attention is essential for various research questions. After this first stage, second-level perceptions can occur, which describe users’ more (time-)intensive engagement with the encountered media content, as signified by close reading or viewing. Investigating these perceptions provides insights into subsequent evaluations and users’ feelings, attitudes, and opinions regarding the content (see also ).

First- and second-level perceptions provide an economical representation of cognitions and thought processes that (can) take place among digital media users. While rather “simple” reasons for engaging with and evaluating digital media content (i.e., second-level perceptions) can be investigated with quantitative surveys or experiments (e.g., ; ), exploring first-level perceptions is more challenging. Quantitative survey methods alone lack information about individual motivations and perceptions, but (retrospective) qualitative interview methods also have limitations, especially when it comes to implicit and fine-grained perceptions such as orienting responses or attentional shifts. To gain deep insights into first- and second-level perceptions, we argue for combining different methodological approaches to benefit from their individual strengths.

First, to better capture individual cognitions and mental processes during a digital media usage situation—while reading an online newspaper article, scrolling through a social media feed, or viewing an online advertisement—think-aloud and thought-list procedures seem to be particularly suitable (). As implemented in previous research (; ; ; ), approaches in which participants received a type of digital stimulus in a controlled setting and subsequently discussed it (or recordings of the usage situation) together with the researcher proved to be fruitful. Ideally, such stimulated self-reports should be supplemented by observations of participants’ behavior during the usage situation to adequately capture the subjective meaning associated with users’ first- and second-level perceptions.

Given the ever more digital research landscape, increasing interest in the use of digital media content, and external conditions such as social contact restrictions, there is a need for renewing and adapting existing multi-method approaches for investigating users’ perceptions in remote settings. We now introduce the remote self-confrontation interview method—an approach that combines advantages from previous offline multi-method designs for studying online users’ behaviors and perceptions and can be conducted completely remotely.

The Remote Self-Confrontation Interview Method

Basic Idea and Methodological Overview

The remote self-confrontation interview method is an adaption and extension of the self-confrontation interview method (; ; ), which was originally developed and used in psychotherapy and psychology (; ). Several disciplines use similar approaches, called, for example, explicitation interviewing (), cued recall (), stimulated recall (), or subjective evidence-based ethnography (). They all rely on “confronting a person with his or her own image, behavior, or experience by means of an artifact; that is, proposing a representation of reality of the person” (, p. 847). Traditionally, this has been done with video recordings of participants performing a given task, such as teachers holding their lessons (), or with first-person perspective videos that participants recorded themselves, for example, while working () or using smartphones (). However, the artifact used to investigate the perceptions, thoughts, and feelings people had while performing a behavior can also be a picture, a screen capture recording, or any other kind of stimulus that aids participants in reliving the experience of interest.

The self-confrontation interview method involves within-method triangulation by combining systematic observations, retrospective think-aloud protocols/stimulated recall approaches, and (qualitative) interview techniques (; ; ). Thus, it allows integrating manifest (observable) behavior and associated cognitions, enabling researchers to gain comprehensive insights into participants’ motives and perceptions.

In general, a self-confrontation interview consists of at least four steps (see ; ): (1) asking participants to perform the behavior of interest; (2) observing/making a recording of the behavior; (3) showing the recording to participants and asking questions about “thoughts and feelings during the act” (i.e., the “core” self-confrontation interview, , p. 144); and (4) transcription and integration of interview and observational data. Usually, the self-confrontation happens immediately after the behavior was performed—because once the usage situation is stored in long-term memory, it ceases to be a “recall or a direct report of the experience but rather reflection or a combination of experience and other related memories” (, p. 206). Compared to concurrent think-aloud protocols, self-confrontation interviews are less distracting for participants because they relieve them of providing a running commentary, which also allows for more automatic actions or self-regulated behaviors to be captured.

Although our approach is very close to the original method, there are some peculiarities that arise directly from the most important one: the remote setting. Instead of performing the study face to face, as it was done previously (; ), the remote self-confrontation interview method relies on videoconferencing systems (e.g., Zoom) that need to have the following features: (1) enabling real-time audiovisual communication between researcher and participant, (2) screen-sharing functions, and (3) securely recording sessions without reliance on third-party software. All these features are necessary to obtain the data needed to adequately investigate users’ first- and second-level perceptions of digital media content and to assist the researcher in subsequent analytical steps.

Another peculiarity concerns the artifact used for the self-confrontation. In comparison to “regular” self-confrontation interviews (and similar approaches), our adaption is focused on the investigation of users’ perceptions of digital media content, which is why participants are not confronted with a full recording of the performed task (e.g., of their browsing session), but only with the stimulus material used (e.g., a social media feed). The advantage of this adaption is that participants are not distracted by their own image in the video, but compelled to focus on the content. Without having to pause the recording several times, the researcher also does not disrupt participants’ think-aloud sessions. It further allows the researcher to direct the conversation more specifically to the experiences that are central to the research interest and to stimulus elements that the participant did not recognize. Moreover, this reduces waiting times resulting from having to convert and review the entire recording of the videoconferencing session.

Last, in addition to “regular” self-confrontations, our approach routinely integrates additional (qualitative) interviews and, if required for the research question, further tasks connected to participants’ perceptions of the stimulus material. This makes it possible to inquire about relevant personal characteristics and traits of the participants, to explore further contextual conditions of the identified perceptions, and thus to add even more depth to the data.

In the following, we will discuss the procedures related to study preparation, data collection, and data analysis in more detail. After explaining the implementation of each step in general, we briefly illustrate them with experiences from our project on users’ perceptions of hate speech in social media environments.

Procedure of Study Preparation

Prior to the observation/interview session, participants receive a link to take part in the study, for example via Zoom, as well as the consent form for their participation. All participants must agree that audio and video recordings of the interview session will be made, as these are crucial for data analysis. Participants should, however, be able to blur their video background if they prefer to keep their personal surroundings private (). The interview length is individualized and varies based on participants’ engagement with the stimulus material. Nevertheless, participants should be given an approximate time frame in advance, based on test sessions, so that they can schedule the session and avoid being in a hurry. Letting participants know in advance that they will be viewing a digital stimulus and sharing their screen also helps to clarify technical issues and to ensure that the required software is working. For this purpose, it is advisable to send instructions regarding the software used and its features in advance. Another important aspect is that participants should be prepared to share their screens, which includes closing any other programs or tabs to protect their privacy (). Care should be taken to ensure that only the opened stimulus material will be shared. Depending on the specific research interest, this stimulus material can be fictitious (e.g., [interactive] mock-up social media platforms) or real (e.g., users’ own social media feeds, or news websites). Regardless of the content, the material should be of appropriate length because a highly time-consuming task is likely to demotivate participants from engaging and paying attention.

In our study on hate speech perceptions, we created a functional (but fictitious) social media feed and integrated various forms of hate speech that differed regarding the targeted groups, its presentation form, and directness. To make the feed appear as realistic as possible, the platform’s design and the non-hateful posts were based on content found on existing social media platforms, with the feed being integrated into a scrollable website.

Procedure of Data Collection

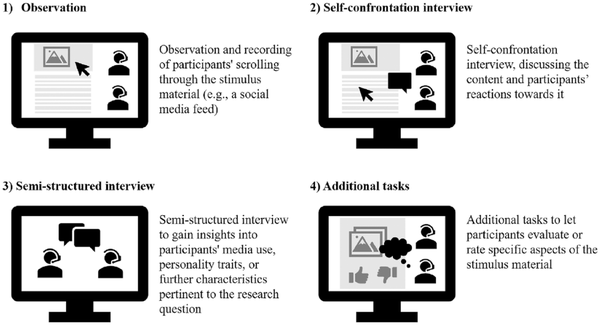

After the participants have given their consent to participation and an appointment has been made, the data collection takes place remotely via videoconferencing software such as Zoom. Each session includes four interrelated steps that we will describe in more detail below (see also Figure 1).

Figure 1. Steps of the remote self-confrontation interview method.

Step 1: Observation of Participants’ Interaction with the Stimulus Material

The first step represents participants’ first contact with the stimulus material while being observed by the researcher. In this step, the researcher asks the participants to view the stimulus material at their own “normal” pace. The task given to participants and the extent to which they should know beforehand about the actual research questions should be adequately discussed, both considering possible effects on participants’ behavior/perceptions and necessary ethical considerations. To keep the usage situation within the given setting as natural as possible, no time limit should be given. Furthermore, no conversation between researcher and participants takes place during this first step, to avoid distractions resulting from the need to answer questions right away or sharing thoughts simultaneously to browsing the stimulus (; ). The interviewer may think about pausing their own camera during this part to help participants feel less watched. While viewing the stimulus material, participants share their screens to allow for observations by the researcher. At the same time, they are visible via their video transmission, which means that both their verbal and nonverbal reactions and their interaction with the stimulus material can be observed and recorded. Accordingly, the observation includes nonverbal responses and social cues that are commonly available in face-to-face interviews (), such as participants’ facial expressions (e.g., frowning, head shaking, or smirking). Moreover, participants’ behaviors within the stimulus (e.g., newsfeed) can be investigated, for example, their dwell time, interaction, and intensity of engagement with specific parts of the content.

The kind of observation used in the remote self-confrontation interview method can be described as open (participants know that they are being observed), direct (researcher is present during the observation), artificial (participants are given a task as part of the observation), and passive-participatory, with the researcher being part of the usage situation, but not interfering with it (see also ). To be better able to address what has been observed in subsequent steps, it is helpful for the researcher to note down reactions/behaviors relevant to the research interest in an observation protocol. This first step thus resembles remote usability evaluations of digital tools and apps, where participants are invited to test and try out digital designs regarding their user experience (; ).

In our study, to create a plausible scenario for the task, we told the participants that they were part of a pilot test of a new social media platform and that it was their job to do an initial evaluation. To brace them for possibly harsh content, we told them that the platform was free of content moderation and restrictions. However, they were unaware that the study (solely) focused on their perceptions of the integrated hate speech content. Given the sensitive nature of the stimulus material, we decided not to pause the interviewer’s video sharing at any time. This allowed the participants to be aware that they were not alone while dealing with the difficult content and that they could voice any discomfort or request the interviewer’s support if necessary.

Step 2: Self-Confrontation Interview

In the second step, participants are first asked to describe how they have perceived the stimulus material and what specific content they remember, in order to capture their first conscious reactions toward the content. This initial questioning enables gathering an almost unfiltered evaluation, without constraining the participant to focus on specific aspects. After that, the stimulus material is brought back up, this time with the researcher sharing their screen. This setting allows the researcher to scroll through the feed independently with a focus on the content relevant for the research question. During this scrolling, the participants are asked to recapitulate and talk about their perceptions of the stimulus material, both unprompted and prompted by the researcher. This re-confrontation with the material and the articulation of one’s previous reactions and perceptions strongly resembles retrospective forms of think-aloud protocols (; ) or stimulated recall approaches (; ). With this, it is particularly suited to capture first- and second-level perceptions as well as participants’ cognitions and thought processes (). Especially the combination of participants’ self-confrontation with the researcher’s observation of the first step provides a unique depth of information, allowing “insight not only into reflected and self-monitored interpretations of one’s own influence but also into influences of which one is not aware and which become visible in the course of action monitored by the researcher from outside (observation) and by the participant from inside (self-confrontation)” (, p. 187). The main challenge for the researcher in this step is to address the observed peculiarities without influencing participants’ narrative flow too much. 
Similar approaches using (online) concurrent or retrospective think-aloud methods are widely used for researching usability and design questions as well (; ; ), lately, for example, concerning COVID-19-related visualizations (; ), or healthcare behavior ().

In our study, participants were re-confronted with the social media feed they had just viewed and recapitulated their usage situation by sharing the perceptions, thoughts, and feelings they had while browsing.

Step 3: Semi-Structured Qualitative Interview

After completion of the self-confrontation interview, a qualitative semi-structured interview follows as already implemented in similar offline concepts (). With the help of an interview guide, further personal characteristics and participants’ attitudes and behaviors that are relevant to the research question can be investigated. The clear separation between self-confrontation interview and qualitative interview is beneficial for both researcher and participants, as it allows a clear distinction to be made between stimulus-related responses and more general information about the individual. By gathering extra context in this step, the researcher can properly interpret participants’ behavior instead of just making assumptions based on what was previously observed.

In our study, we obtained additional information that helped us to understand and contextualize participants’ behaviors such as their experiences with and their awareness toward online hate. For example, one participant shared that she had personally experienced hate speech in the past year, which is why she avoided engaging with similar content out of self-protection—a behavior that we had already noticed during the observation phase (Step 1).

Step 4: Additional Tasks

Finally, additional problem-focused tasks can be used to gather even more detailed evaluations of the stimulus material. However, these tasks should be connected to previous parts of the study and adequately integrated so as not to interrupt the flow of the session. In this step, it can be particularly helpful to take advantage of the technical possibilities offered by the remote implementation, for example by integrating interactive tools or questionnaires. These additions allow for more in-depth examination of central parts of the stimulus as well as direct comparisons of participants performing the same task.

In our study, participants were asked whether they would report selected hateful posts in an interactive setup (i.e., rating posts with thumbs up or thumbs down).

Procedure of Debriefing

Following the completion of the interview, a debriefing should take place, providing the participants with information about the research aims and necessary deceptions (e.g., regarding a fictitious stimulus). In the case of sensitive research topics, it is also essential to consider participants’ well-being. The closing of the conversation should therefore, on the one hand, round off the study situation well and, on the other hand, not leave the participants feeling uninformed, insecure, or left alone with their thoughts (). To this end, participants should be given sufficient time to share further thoughts on the study and to address any needs resulting from their participation.

After approximately an hour of discussing hate speech, a potentially stressful topic for many people, we did not simply “cut off” the conversation, but aimed to make every participant feel well-informed and comfortable. Due to the emotionality of the topic, we provided them with additional information about hate speech and links to support websites, and invited them to contact us at any time should further issues or questions arise.

Procedure of Data Analysis

The multimodality of the remote self-confrontation interview method leaves the researcher with different types of data, which can be combined during post-processing and analyzed together for a more comprehensive understanding. First, the interviews are to be transcribed with the help of the audio recordings, for which (platform-integrated) automatic transcription tools can be useful. It is up to the researcher to decide whether a verbatim or a somewhat cleaned version will suffice. For the parts of the self-confrontation interview that integrate retrospective think-aloud protocols, however, it is advisable to transcribe participants’ exact wording in order to be able to depict the cognitions surrounding their perceptions as accurately as possible. If the transcription is done automatically, the researcher must ensure that everything is properly documented and that no—for the software ostensibly irrelevant—details get lost.

In addition to these transcripts (textual data), the video recordings (audiovisual data) provide valuable additional data that can/should be used to enrich the transcripts. The videos not only make it possible to measure how much time participants spent on certain parts of the stimulus, but also show how they reacted to it (nonverbally) and which parts they did not attend to. Participants’ facial expressions in particular (e.g., smiling, frowning) give valuable insights into their perceptions and evaluations and should be added to the transcript. The protocols from the observation phase come in handy at this step as well, as they help to find the moments that have been identified as striking/relevant during the immediate observation. However, the recordings also make it possible to analyze behaviors that may have gone unnoticed during the direct observation.
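As a rough illustration of how time spent on parts of the stimulus could be derived from a recording, the following sketch sums viewing intervals per stimulus element. It assumes that a coder has noted by hand when each element entered and left the visible screen area; the element names, timestamps, and the one-second threshold are hypothetical and not taken from our study.

```python
from collections import defaultdict

# Hypothetical viewing intervals coded by hand from a session recording:
# (stimulus element, second it entered view, second it left view).
intervals = [
    ("post_01", 0.0, 4.5),
    ("post_02", 4.5, 6.0),
    ("post_03", 6.0, 18.0),
    ("post_02", 18.0, 21.5),  # participant scrolled back up
]


def dwell_times(intervals):
    """Sum the viewing time per stimulus element, in seconds."""
    totals = defaultdict(float)
    for element, start, end in intervals:
        if end < start:
            raise ValueError(f"interval for {element} ends before it starts")
        totals[element] += end - start
    return dict(totals)


def unattended(all_elements, totals, threshold=1.0):
    """Elements never in view, or in view for less than `threshold` seconds."""
    return [e for e in all_elements if totals.get(e, 0.0) < threshold]


totals = dwell_times(intervals)
skipped = unattended(["post_01", "post_02", "post_03", "post_04"], totals)
```

Such totals only indicate how long an element was on screen, not whether it was actually read, which is why they are best interpreted together with participants' own accounts.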

In the context of our study, for example, we had a close look at which of the relevant posts (containing hate speech) were quickly scrolled over and which were considered more closely. We added this information as well as notes on participants’ nonverbal reactions to the final transcript, as illustrated in the following excerpt. Here, a female participant was once more confronted with a post she previously saw within the stimulus:

“Yeah, so I just looked at it [looks pensive] but did I get stuck right away? [reads the post again; frowns] Yeah, well, I mean, I didn’t scroll any further, so to say [frowns; shakes her head].” (Anette, f, 28).

Her own description of the first contact with the post, combined with the researcher’s external observations, allows for richer insights and enables analyzing the data without constant consultation of the video recordings.
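One possible way to produce such annotated transcripts is sketched below. It assumes that both the transcript segments and the observation notes carry timestamps relative to the start of the session; the `Utterance` and `Observation` classes and all timestamps are hypothetical, while the wording is borrowed from the excerpt above.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    start: float  # seconds from session start (hypothetical values)
    end: float
    text: str


@dataclass
class Observation:
    time: float  # seconds from session start (hypothetical values)
    note: str    # e.g., "frowns"


def annotate(utterances, observations):
    """Append bracketed notes to the utterance during which they occurred."""
    parts = []
    for u in utterances:
        notes = [o.note for o in observations if u.start <= o.time < u.end]
        suffix = f" [{'; '.join(notes)}]" if notes else ""
        parts.append(u.text + suffix)
    return " ".join(parts)


utterances = [
    Utterance(0.0, 3.0, "Yeah, so I just looked at it"),
    Utterance(3.0, 7.0, "but did I get stuck right away?"),
]
observations = [
    Observation(2.0, "looks pensive"),
    Observation(5.0, "reads the post again"),
    Observation(6.0, "frowns"),
]
annotated = annotate(utterances, observations)
```

In this sketch, notes that fall outside every utterance are silently dropped, so a manual check against the observation protocol remains advisable.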

Finally, the annotated transcripts are compiled and analyzed using content analytical approaches, the complexity and depth of which will depend on the research interest. We recommend using qualitative content analysis according to Mayring. This approach relies on selecting the units of analysis (in remote self-confrontation interviews: observed behaviors; statements in the interviews), developing a category system (based both on theoretical assumptions and the actual interview/study material), and coding the data in several cycles. All codings in the categories and subcategories can then be compared within and between participants to assess similarities/differences and identify main themes. In this way, the researcher is able to uncover overarching patterns and factors influencing first- and second-level perceptions. Mayring’s approach offers the advantage of using the technical know-how of quantitative content analysis, while at the same time adhering to the principles of qualitative openness (). Compared to other qualitative analysis strategies, this approach can thus not only cope with a larger amount of material but also enables genuine mixed-methods analyses (i.e., combining quantitative “counting” and qualitative complexity). Analysis programs such as MAXQDA provide several customized tools for this purpose.
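The quantitative “counting” part of such an analysis can be illustrated with a small sketch that tallies coded segments per participant and identifies categories shared by all participants. The participant labels and category names are invented for illustration and do not reflect our actual category system; in practice, dedicated analysis software would typically take over this step.

```python
from collections import Counter

# Hypothetical coded segments: (participant, category).
codings = [
    ("P1", "ignored"),
    ("P1", "close_reading"),
    ("P1", "ignored"),
    ("P2", "close_reading"),
    ("P2", "emotional_reaction"),
]


def counts_by_participant(codings):
    """Count how often each category was coded for each participant."""
    table = {}
    for participant, category in codings:
        table.setdefault(participant, Counter())[category] += 1
    return table


def shared_categories(table):
    """Categories that were coded at least once for every participant."""
    sets = [set(counter) for counter in table.values()]
    return set.intersection(*sets) if sets else set()


table = counts_by_participant(codings)
common = shared_categories(table)
```

The per-participant counts support within-participant comparisons, whereas the shared categories offer a simple starting point for between-participant comparisons.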

Evaluation of the Remote Self-Confrontation Interview Method

Drawing on our own experiences as well as methodological discussions regarding comparable approaches (e.g., ; ; , ; ; ), the following section focuses on the advantages and challenges of the remote self-confrontation interview method, covering both the researchers’ and the participants’ perspectives, and provides an outlook on how it might be implemented in future studies. While the method is of course not completely novel, the unique combination of approaches as well as the fully remote implementation shed light on specific benefits and drawbacks.

Advantages and Challenges for Participants

For participants, the remote self-confrontation interview method offers the benefits that remote participation in interviews generally entails. Unlike in the (laboratory) face-to-face situation, participants are at home in their “natural” digital media use environment, making the study feel less artificial. Accordingly, participants’ perceptions occur and are captured in a situation that is considerably closer to their reality, in a location where they are routinely confronted with similar content. This intimacy can pay off, especially in the case of sensitive and personal topics, as being embedded in a secure and familiar environment makes it easier to speak frankly and openly (; ). Since no travel time and costs arise when participating remotely, participation hurdles are much lower as well, resulting in a broader range of potential participants who do not have to be in the same location as the researcher. Participants may even be less time-conscious because they are already at home, resulting in more intense interactions than in offline settings (). Our own study also showed that “the fun factor” and the added value for the participants should not be neglected. Participants told us several times how much they liked the interactivity and variety of the study setup. Some even inquired whether they would be allowed to participate in potential follow-up studies. Creative and interactive components are especially beneficial in remote settings, where participants may find it more difficult to stay focused.

However, the remote implementation also poses challenges that affect participants directly or indirectly. First of all, they need to be at least somewhat tech savvy in order to (1) be able to participate in a video conference and (2) prevent the study situation from feeling too artificial (see also ). Moreover, as audio and video recordings are made, participants often hold some legitimate concerns regarding their privacy and the protection of their personal data, which must be strictly ensured by the researchers. As already discussed, ethical and emotional considerations have to be made in order to make the remote participation as comfortable and secure as possible—especially when it comes to sensitive topics. While it can be advantageous for participants to be in their regular environment when participating remotely, the physical distance between them and the researcher can also lead to difficulties. When talking about personal topics, some participants might need the physical presence of the researcher in order to feel safe (). However, there are positions acknowledging that a sense of closeness can also be experienced remotely “through a conversational transaction to share one’s thoughts and feelings with an empathic listener without physical proximity” (, p. 3). In any case, it seems crucial to balance the distance-proximity issues resulting from remote setups and keep participants’ individual preferences in mind.

Advantages and Challenges for Researchers

At first sight, the remote implementation seems to make things much easier for the researcher, as there is no need to have access to adequate premises and hardware/software. The functionality of modern videoconferencing software also facilitates the integration of multimodal stimuli and interactive tools, simultaneously making it comparatively easy to switch between different setups and scenarios. Regarding types and quality of the obtained data, the remote setting makes it possible to observe and record participants’ facial expressions directly, which, as our study has shown, provides valuable information regarding their first- and second-level perceptions (see also ). Compared to a face-to-face setting, such observations are much more inconspicuous and less invasive during videoconferencing, as participants do not feel like they are being stared at constantly (). Having video recordings of participants’ facial expressions and, at least to a certain extent, of their body movements ultimately adds a lot of extra information to the data of verbal interviews () that has been missing in previous offline studies (). Moreover, the recordings not only enrich and simplify the analysis for the researcher but also allow them to focus more on the participant’s behavior and less on documenting the session.

Nevertheless, the remote implementation of the self-confrontation interview also entails drawbacks for the researcher and the quality of the data. Although the observation phase is easier to implement and not as invasive, some visual cues and body language might only be captured in face-to-face settings (). Working remotely can make it difficult to control for distractions such as people entering the room, children or pets demanding attention, or an unstable Internet connection. To minimize these issues, it is important to conduct a thorough briefing and to check equipment and connections in advance. Due to the extensive preparation phase, the focus on individual experiences, and the rather complex data collection and analysis, the remote self-confrontation interview method also naturally limits the number of participants that can be studied. This limitation, however, is well compensated by the richness of the obtained data.

Conclusion and Perspectives for Future Research

Despite certain challenges associated with remote research setups, the advantages for researchers and participants are numerous and significantly increase the scope of action for both. Future studies should make use of the method’s flexibility by adopting innovative technologies. For instance, interactive and clickable stimuli—such as mock-up social media platforms or websites ()—could provide valuable information about interaction patterns or participatory behaviors. When exact dwell times and gaze patterns are of interest, participants’ interactions could also be assessed by using remote eye-tracking methods (e.g., ). Such technical extensions, however, must be weighed against the actual research focus and available resources. Following , we also argue that the decision whether to conduct qualitative (interview) studies remotely or not should always be guided by the contextual conditions of the research question and the object of study.

In our view, the desired amount of proximity between participants and researcher requires the most consideration. We are convinced that the researcher’s presence is a vital component of qualitative inquiries (), especially when examining sensitive topics. According to our own and other researchers’ experiences, rapport is achievable online as well (), but requires the interviewer to be well prepared and trained. Nonetheless, for some research questions, participants must not feel watched, and more distance may be needed. Remote self-confrontation interviews offer flexibility in this regard and should be considered in future study designs. Taken together, the remote self-confrontation interview method is an effective, resource-saving, and broadly applicable way to study online users’ perceptions of, as well as reactions to, digital media content, even beyond times of pandemics.

Declaration of Conflicting Interests

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by the German Federal Ministry of Education and Research (BMBF) under Grant number 13N15340 (KISTRA project).

Ursula Kristin Schmid

https://orcid.org/0000-0002-1892-002X

1. Overviews of adjustments made for qualitative (participatory) research in response to COVID-19 restrictions are provided, for instance, by , , , or .

2. Further information on the study can be found in . The study was reviewed and approved by the ethics committee of the Faculty of the Social Sciences of the first author’s university.

3. Despite studies that piloted think-aloud methods in remote settings (e.g., design walkthroughs: ; , information-seeking exercises: , user experience tests: ; ), retrospective self-confrontation interviews have not yet been conducted in a remote setting.

References

- Alhejaili A., Wharrad H., Windle R. (2022). A pilot study conducting online think aloud qualitative method during social distancing: Benefits and challenges. Healthcare, 10(9), 1700. https://doi.org/10.3390/healthcare10091700

- Bailey K. G., Sowder W. T. (1970). Audiotape and videotape self-confrontation in psychotherapy. Psychological Bulletin, 74(2), 127–137. https://doi.org/10.1037/h0029633

- Barnum C. M. (2002). Usability testing and research. Longman.

- Breuer F. (1995). Das Selbstkonfrontations-Interview als Forschungsmethode. In König E., Zedler P. (Eds.), Bilanz qualitativer Forschung. Band II: Methoden (pp. 159–180). Deutscher Studien Verlag.

- Buber R. (2007). Denke-Laut-Protokolle. In Buber R., Holzmüller H. H. (Eds.), Qualitative Marktforschung: Konzepte—Methoden—Analysen (pp. 555–568). Gabler. https://doi.org/10.1007/978-3-8349-9258-1_35

- Calderhead J. (1981). Stimulated recall: A method for research on teaching. British Journal of Educational Psychology, 51(2), 211–217.

- Çay D., Nagel T., Yantaç A. E. (2020). Understanding user experience of COVID-19 maps through remote elicitation interviews. arXiv, arXiv:2009.01465. http://arxiv.org/abs/2009.01465

- Engward H., Goldspink S., Iancu M., Kersey T., Wood A. (2022). Togetherness in separation: Practical considerations for doing remote qualitative interviews ethically. International Journal of Qualitative Methods, 21, 1–9. https://doi.org/10.1177/16094069211073212

- Fan M., Wang Y., Xie Y., Li F. M., Chen C. (2022). Understanding how older adults comprehend COVID-19 interactive visualizations via think-aloud protocol. International Journal of Human–Computer Interaction, 39(8), 1626–1642. https://doi.org/10.1080/10447318.2022.2064609

- Fauquet-Alekhine P., Bauer M. W., Lahlou S. (2021). Introspective interviewing for work activities: Applying subjective digital ethnography in a nuclear industry case study. Cognition, Technology & Work, 23(3), 625–638. https://doi.org/10.1007/s10111-020-00662-9

- Fox-Turnbull W. (2009). Stimulated recall using autophotography—A method for investigating technology education. In Bekker A., Mottier I., de Vries M.J. (Eds.), Proceedings of the PATT-22 Conference. Strengthening the Position of Technology Education in the Curriculum, Delft, The Netherlands, 24–28 August 2009, pp. 204–217.

- Gemignani M. (2011). Between researcher and researched: An introduction to countertransference in qualitative inquiry. Qualitative Inquiry, 17(8), 701–708. https://doi.org/10.1177/1077800411415501

- Gray L. M., Wong-Wylie G., Rempel G. R., Cook K. (2020). Expanding qualitative research interviewing strategies: Zoom video communications. The Qualitative Report, 25(5), 1292–1301. https://doi.org/10.46743/2160-3715/2020.4212

- Gruber M., Eberl J. M., Lind F., Boomgaarden H. G. (2021). Qualitative interviews with irregular migrants in times of COVID-19: Recourse to remote interview techniques as a possible methodological adjustment. Forum Qualitative Sozialforschung, 22(1), 1. https://doi.org/10.17169/fqs-22.1.3563

- Hall J., Gaved M., Sargent J. (2021). Participatory research approaches in times of COVID-19: A narrative literature review. International Journal of Qualitative Methods, 20, 1–15. https://doi.org/10.1177/16094069211010087

- Haßler J., Maurer M., Oschatz C. (2019). What you see is what you know: The influence of involvement and eye movement on online users’ knowledge acquisition. International Journal of Communication, 13, 3739–3763.

- Heitmayer M., Lahlou S. (2021). Why are smartphones disruptive? An empirical study of smartphone use in real-life contexts. Computers in Human Behavior, 116, 106637. https://doi.org/10.1016/j.chb.2020.106637

- Irani E. (2019). The use of videoconferencing for qualitative interviewing: Opportunities, challenges, and considerations. Clinical Nursing Research, 28(1), 3–8. https://doi.org/10.1177/1054773818803170

- Johnson D. R., Scheitle C. P., Ecklund E. H. (2021). Beyond the in-person interview? How interview quality varies across in-person, telephone, and Skype interviews. Social Science Computer Review, 39(6), 1142–1158. https://doi.org/10.1177/0894439319893612

- Kümpel A. S. (2019a). Dynamik im Blick: Die qualitative Beobachtung mit Post-Exposure-Walkthrough als Verfahren für die Rekonstruktion individueller Navigations- und Selektionshandlungen auf sozialen Netzwerkseiten (SNS). In Müller P., Geiss S., Schemer C., Naab T. K., Peter C. (Eds.), Dynamische Prozesse der öffentlichen Kommunikation. Methodische Herausforderungen (pp. 216–238). Herbert von Halem.

- Kümpel A. S. (2019b). The issue takes it all? Incidental news exposure and news engagement on Facebook. Digital Journalism, 7(2), 165–186. https://doi.org/10.1080/21670811.2018.1465831

- Lahlou S. (2011). How can we capture the subject’s perspective? An evidence-based approach for the social scientist. Social Science Information, 50(3–4), 607–655. https://doi.org/10.1177/0539018411411033

- Lim S. (2002). The self-confrontation interview. Towards an enhanced understanding of human factors in web-based interaction for improved website usability. Journal of Electronic Commerce Research, 3(3), 162–173.

- Lobe B., Morgan D., Hoffman K. A. (2020). Qualitative data collection in an era of social distancing. International Journal of Qualitative Methods, 19, 1–8. https://doi.org/10.1177/1609406920937875

- Mastrianni A., Kulp L., Sarcevic A. (2021). Transitioning to remote user-centered design activities in the emergency medical field during a pandemic. In Kitamura Y. (Ed.), Extended abstracts of the 2021 CHI conference on human factors in computing systems (pp. 1–8). Association for Computing Machinery. https://doi.org/10.1145/3411763.3443444

- Mayring P. (2015). Qualitative Inhaltsanalyse: Grundlagen und Techniken (12th ed.). Beltz Verlag. https://www.beltz.de/fachmedien/sozialpaedagogik_soziale_arbeit/buecher/produkt_produktdetails/27650-qualitative_inhaltsanalyse.html

- Mayring P., Fenzl T. (2019). Qualitative Inhaltsanalyse. In Baur N., Blasius J. (Eds.), Handbuch Methoden der empirischen Sozialforschung (pp. 633–648). Springer Fachmedien. https://doi.org/10.1007/978-3-658-21308-4_42

- Messmer R. (2015). Stimulated Recall als fokussierter Zugang zu Handlungs- und Denkprozessen von Lehrpersonen. Forum Qualitative Sozialforschung, 16(1), 160–169. https://doi.org/10.17169/fqs-16.1.2051

- Ohme J., Mothes C. (2020). What affects first- and second-level selective exposure to journalistic news? A social media online experiment. Journalism Studies, 21(9), 1220–1242. https://doi.org/10.1080/1461670X.2020.1735490

- Oltmann S. (2016). Qualitative interviews: A methodological discussion of the interviewer and respondent contexts. Forum Qualitative Sozialforschung, 17(2), 15. https://doi.org/10.17169/fqs-17.2.2551

- Omodei M. M., McLennan J., Wearing A. J. (2005). How expertise is applied in real-world dynamic environments: Head-mounted video and cued recall as a methodology for studying routines of decision making. In Betsch T., Haberstroh S. (Eds.), The routines of decision making (pp. 271–288). Lawrence Erlbaum Associates Publishers.

- Opdenakker R. (2006). Advantages and disadvantages of four interview techniques in qualitative research. Forum Qualitative Sozialforschung, 7(4). https://doi.org/10.17169/fqs-7.4.175

- Papoutsaki A., Laskey J., Huang J. (2017). SearchGazer: Webcam eye tracking for remote studies of web search. In Proceedings of the 2017 conference on conference human information interaction and retrieval, Oslo, Norway, 7–11 March 2017. ACM, pp. 17–26. https://doi.org/10.1145/3020165.3020170

- Rahman S. A., Tuckerman L., Vorley T., Gherhes C. (2021). Resilient research in the field: Insights and lessons from adapting qualitative research projects during the COVID-19 pandemic. International Journal of Qualitative Methods, 20. https://doi.org/10.1177/16094069211016106

- Rieger D., Bartz F., Bente G. (2014). Reintegrating the ad. Effects of context congruency banner advertising in hybrid media. Journal of Media Psychology, 27(2), 64–77. https://doi.org/10.1027/1864-1105/a000131

- Rieger D., Wulf T., Riesmeyer C., Ruf L. (2022). Interactive decision-making in entertainment movies: A mixed-methods approach. Psychology of Popular Media, 12(3), 294–302. https://doi.org/10.1037/ppm0000402

- Rix G., Lièvre P. (2010). Self-confrontation method. In Mills A. J., Durepos G., Wiebe E. (Eds.), Encyclopedia of case study research (Vol. 2, pp. 847–849). Sage. https://doi.org/10.4135/9781412957397

- Schmid U. K., Kümpel A. S., Rieger D. (2024). How social media users perceive different forms of online hate speech: A qualitative multi-method study. New Media & Society, 26(5), 2614–2632. https://doi.org/10.1177/14614448221091185

- Shapiro M. A. (1994). Think-aloud and thought-list procedures in investigating mental processes. In Lang A. (Ed.), Measuring psychological responses to media messages (pp. 1–14). Routledge.

- Simon-Liedtke J. T., Bong W. K., Schulz T., Fuglerud K. S. (2021). Remote evaluation in universal design using video conferencing systems during the COVID-19 pandemic. In Antona M., Stephanidis C. (Eds.), Universal access in human-computer interaction. Design methods and user experience (pp. 116–135). Springer International Publishing. https://doi.org/10.1007/978-3-030-78092-0_8

- Sülflow M., Schäfer S., Winter S. (2019). Selective attention in the news feed: An eye-tracking study on the perception and selection of political news posts on Facebook. New Media & Society, 21(1), 168–190. https://doi.org/10.1177/1461444818791520

- Thunberg S., Arnell L. (2021). Pioneering the use of technologies in qualitative research—A research review of the use of digital interviews. International Journal of Social Research Methodology, 25(6), 757–768. https://doi.org/10.1080/13645579.2021.1935565

- Tremblay S., Castiglione S., Audet L.-A., Desmarais M., Horace M., Peláez S. (2021). Conducting qualitative research to respond to COVID-19 challenges: Reflections for the present and beyond. International Journal of Qualitative Methods, 20, 160940692110096. https://doi.org/10.1177/16094069211009679

- Unkel J. (2021). Measuring selective exposure in mock website experiments: A simple, free, and open-source solution. Communication Methods and Measures, 15(1), 1–16. https://doi.org/10.1080/19312458.2019.1708284

- van den Haak M., De Jong M., Jan Schellens P. (2003). Retrospective versus concurrent think-aloud protocols: Testing the usability of an online library catalogue. Behaviour & Information Technology, 22(5), 339–351. https://doi.org/10.1080/0044929031000

- Vermersch P. (2018). The explicitation interview. https://www.researchgate.net/publication/324976173_The_explicitation_interview

- von Cranach M., Kalbermatten U. (1982). Ordinary interactive action: Theory, methods and some empirical findings. In von Cranach M., Harré R. (Eds.), The analysis of action: Recent theoretical and empirical advances (pp. 115–160). Cambridge University Press.

- Young R. A., Valach L., Dillabough J.-A., Dover C., Matthes G. (1994). Career research from an action perspective: The self-confrontation procedure. The Career Development Quarterly, 43(2), 185–196. https://doi.org/10.1002/j.2161-0045.1994.tb00857.x