1 Introduction

In recent years, the burgeoning field of genomics has been revolutionized by the advent of high-throughput sequencing technologies (), leading to exponential growth in genomic data (). This deluge of data presents a significant challenge for traditional genomic analysis methods, particularly in terms of computational efficiency and storage requirements. Calculating the Average Nucleotide Identity (ANI) similarity of genome files is the key step for various downstream workloads in genome analysis, such as large-scale database search (), clustering (), and taxonomy analysis (). Traditional BLAST-based methods (, ) rely on base-level alignment to perform accurate ANI calculations. However, the alignment process is computationally expensive and requires hours or days to calculate ANIs. The slow speed of alignment-based approaches has become a major bottleneck for large-scale genome analysis.

Several state-of-the-art works have tried to speed up large-scale genome analysis by approximating the genome similarity using more efficient data structures. These works can be categorized into two types: mapping-based and sketch-based approaches as follows. FastANI () and Skani () are two representative mapping-based algorithms that leverage k-mer-based alignment for ANI estimation. FastANI is built upon the Mashmap sequence mapping algorithm () and achieves a significant speedup compared to the alignment-based baseline (). Skani uses the sparse chaining to increase the sensitivity of the mapping, further improving accuracy and efficiency of ANI estimation. However, both FastANI and Skani suffer from high memory consumption. For example, Skani needs to store indexing files with a storage size comparable to the original dataset. FastANI encounters out-of-memory issues on large datasets as reported in ().

In this work, we focus on the “genome sketching,” which is regarded as a promising solution to address the aforementioned challenges because it significantly reduces storage size while providing satisfactory accuracy of estimation (). Unlike alignment-based or mapping-based tools (, , , ) that require expensive computation or large memory space, sketch-based approaches (, , , ) only preserve the most essential features of the genome (called the “sketch”). The sketch’s compact representation enables rapid and efficient ANI approximation for genome files. Mash () and Sourmash () represent groundbreaking efforts to use MinHash () and FracMinHash (, ) to estimate genomic similarity, respectively. Bindash () improves the accuracy of ANI estimation over Mash by adopting the one-permutation rolling MinHash with optimal densification (). Dashing 2 () utilizes the SetSketch data structure () and incorporates multiplicities to produce memory-efficient genome sketches and accurate estimation of ANI.

1.1 Motivation

By transforming raw genome data into more compact data structures, genome sketching represents a paradigm shift in bioinformatics, paving the way for more scalable and rapid genomic analyses in the era of big data. Recent studies on hyperdimensional computing (HDC) have demonstrated the effectiveness of using HDC to accelerate bioinformatics workloads, such as pattern matching (, , , ) and spectral clustering ().

1.1.1 Limitations of existing HDC/SimHash-related search algorithms

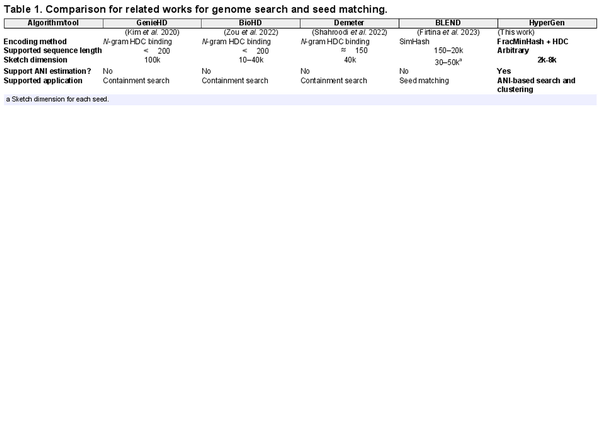

Table 1 summarizes the key features of state-of-the-art tools that utilize HDC or SimHash algorithms. GenieHD (), BioHD (), and Demeter () are three representative HDC-based tools. Due to the limitation of N-gram binding-based encoding, existing HDC tools for genome search only supports short genomes sequences with length . However, they require very large sketch HV dimension (10k to 100k) to achieve good accuracy, which degradates the overall efficiency. The N-gram binding-based encoding shows high computational complexity. In comparison, HyperGen adopts a more efficiency encoding method that combines FracMinHash and HDC aggregation.

Meanwhile, existing HDC-based tools do not support ANI estimation and ANI-based search. They can only check the containment of given query. These drawbacks limit their downstream applications. The other related work is BLEND () that uses SimHash to encode genome seeds. The difference includes: (i) HyperGen and BLEND are for different tasks. BLEND is used for seed matching while HyperGen is for more general-purpose ANI estimation and database search; (ii) Compared to HyperGen, BLEND uses much smaller sketch dimension for each seed.

1.1.2 Opportunities and limitations of DotHash

Recent DotHash () shows superior space and computational efficiency for the Jaccard similarity estimation. DotHash leverages the HDC-based random indexing (, ) and is originally designed for fast set intersection estimation. The main difference between DotHash and MinHash lies in the format of generated sketch: MinHash represents a sketch as a hash set with discrete values, while DotHash represents a sketch with a nonbinary vector of high dimension. DotHash’s vector representation of the sketch achieves faster processing speed since it can fully exploit the low-level hardware parallelism [such as CPU’s Single Instruction Multiple Data (SIMD) and GPU] optimized for vector processing.

However, DotHash still suffers from two major limitations that hinder its application to genome sketching. First, DotHash is only applicable to non-genome data since it lacks an effective k-mer sampling strategy to generate genomic sketches. Second, DotHash uses high-precision floating point numbers to represent random vectors, exhibiting large runtime overhead and slow speed. Our goal in this work is using HDC (, ) to achieve better tradeoffs between ANI estimation accuracy, runtime performance, and memory efficiency over previous sketch-based tools (, , ).

1.2 Contributions

In this work, we propose HyperGen, a novel tool for efficient genome sketching and ANI estimation. HyperGen exploits the emerging HDC [similar to DotHash ()] to boost genomic ANI calculation. Specifically, we optimize DotHash’s efficiency by converting the sketch generation process into a low bit-width integer domain. This allows us to represent the genome sketch using the high-dimensional vector (HV) at the cost of negligible runtime overhead. Based on the HV sketch, we propose an approach to estimate the Jaccard similarity using vector matrix multiplication. We also introduce a lossless compression scheme using bit-packing to further reduce the sketch size.

We benchmark HyperGen against several state-of-the-art tools (, , , ). For ANI estimation, HyperGen demonstrates comparable or lower ANI estimation errors compared to other baselines across different datasets. For generated sketch size, HyperGen achieves to sketch size reduction as compared to Mash () and Dashing 2 (), respectively. HyperGen also enjoys the benefits of the modern hardware architecture optimized for vector processing. HyperGen shows about sketch generation speedup over Mash and up to search speedup over Dashing 2. To the best of our knowledge, HyperGen offers the optimal trade-off between speed, accuracy, and memory efficiency for ANI estimation.

2 Materials and methods

2.1 Preliminaries

Fast computation of Average Nucleotide Identity (ANI) is pivotal in genomic data analysis (microbial genomics to delineate species), as ANI serves as a standardized and genome-wide measure of similarity that helps facilitate genomic data analysis. Popular approaches to calculate ANI include: alignment (, ), mapping (, ), and sketch (, , , ). However, base-level alignment-based and k-mer -level mapping-based methods involve either time-consuming pairwise alignments or memory-intensive mappings. In the following sections, we focus on the sketch-based ANI estimation with significantly better efficiency.

2.1.1 MinHash and Jaccard similarity

Existing sketh-based approaches (, , , ) do not directly compute ANI. Instead, they compute the Jaccard similarity (), which is used to measure the similarity of two given k-mer sets. Then the Jaccard similarity is converted to ANI as shown in Equation (8). The conversion between Jaccard similarity and ANI is computationally trivial, so most efforts in previous works (, , , ) are to find more efficient and accurate ways to estimate Jaccard similarity.

Without loss of generality, we denote k-mer as consecutive substrings with length k of the nucleotide alphabet, e.g. . denotes the set of k-mers sampled from genome sequence X based on a given condition. HyperGen uses k-mer’s hash to represent for better efficiency. Therefore, the Jaccard similarity for two sequences, A and B, can be computed as follows: where is the Jaccard similarity indicating the overlap between k-mer sets of two sequences. Note that HyperGen uses canonical k-mers by default.

A straightforward idea to sample k-mer sets in Equation (1) is to keep all k-mers. However, this incurs prohibitive complexity since all unique k-mers need to be stored. The resulting complexity is for a sequence of length L. To alleviate the complexity issue, Mash () and its variants (, ) use MinHash () to approximate the Jaccard similarity by only preserving a tiny subset of k-mers. In particular, Mash keeps N k-mers that have the smallest hash values . In this case, the Jaccard similarity is estimated as:

Here, using MinHash helps to reduce the sketch complexity from to a constant . The sampled k-mer set that stores N smallest k-mer hash values is regarded as the genome file sketch required for ANI estimation.

2.1.2 Jaccard similarity using DotHash

A recent work () demonstrates that the speed and memory efficiency of Jaccard similarity approximation can be improved by using the DotHash based on Random Indexing (). The key step to compute Jaccard similarity in Equation (1) is computing the cardinality of set intersection while the cardinality of set union can be calculated through .

In DotHash, each element of the set is mapped to a unique D-dimensional vector in real number using the mapping function . Each set is expressed as an aggregation vector such that where the aggregation vector sums all the elements’ vectors generated by the mapping function . One necessary constraint for function is: the generated vectors should satisfy the quasi-orthogonal properties:

The quasi-orthogonal property in Equation (4) can be visualized in Supplementary Fig. S1. DotHash () uses a pseudo random number generator (RNG) as the mapping function because the RNG can generate uniform and quasi-orthogonal vectors in an efficient manner.

Using the quasi-orthogonal properties, the cardinality approximation for set intersection is transformed into the dot product of two aggregation vectors: where those vectors not in the set intersection ( ) have no contribution to the inner product due to their quasi-orthogonality as in Equation (4). DotHash effectively aggregates all elements in a set to form an aggregation vector with D dimension. The space and computational complexity of set cardinality estimation is . Moreover, the computation process of DotHash is highly vectorized and can be easily boosted by existing hardware architecture optimized for general matrix multiply (GEMM).

2.2 Proposed HyperGen sketching

The aforementioned DotHash provides both good accuracy and runtime performance (). However, we observe two major limitations of DotHash: 1. Although DotHash can be used to calculate the cardinality of set intersection, it cannot be applied to genomic sketching because DotHash lacks a k-mer sampling module that identifies the useful k-mers; 2. The computation and space efficiency can be further optimized because the previous DotHash manages and processes all vectors in floating-point (FP) numbers. The mapping function incurs significantly overhead.

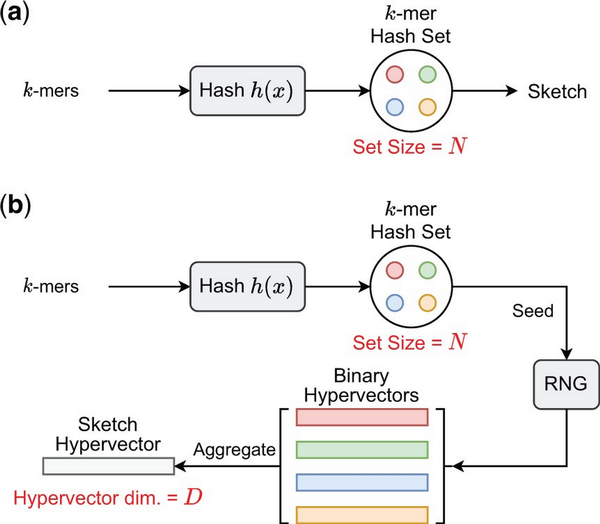

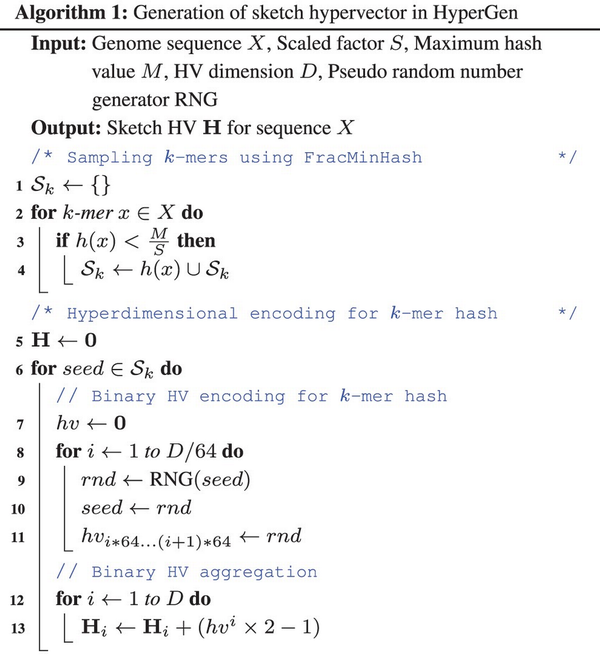

We present HyperGen for genomic sketching applications that addresses the limitations of DotHash. Figure 1 shows the algorithmic overview for (a) Mash-like sketching and (b) HyperGen sketching schemes. The first step of HyperGen is similar to Mash, where both Mash and HyperGen extract k-mers by sliding a window through given genome sequences. The extracted k-mers are uniformly hashed into the corresponding numerical values by a hash function h(x). To ensure low memory complexity, most k-mer hashes are filtered and only a small portion of them are preserved in the k-mer hash set to work as the sketch (or signature) of the associative genome sequence. The key difference is that HyperGen adds a key step, called Hyperdimensional Encoding for k-mer Hash, to convert k-mer hash values into binary hypervectors (HVs) and aggregate to form the D-dimensional sketch HV. To distinguish itself from DotHash, the random vector in HyperGen is named HV. Algorithm 1 summarizes the flow of generating sketch hypervector in HyperGen. In the following sections, we explain the details of HyperGen.

Algorithmic overview for (a) Mash-like sketching, and (b) HyperGen sketching for genome sequences. Mash stores the genome sketch in a k-mer hash set with complexity while HyperGen aggregates N k-mer hashes into a D-dimensional sketch HV with complexity.

2.2.1 Step 1: k-mer hashing and sampling

Mash uses MinHash that keeps the smallest N hash values as the genome sketch. In comparison, HyperGen adopts a different k-mer hashing and sampling scheme. Specifically, HyperGen performs a sparse k-mer sampling using FracMinHash (, ) (instead of MinHash in Mash). Given a hash function that maps k-mers into the corresponding nonnegative integer, the sampled k-mer hash set is expressed as Line 2–4 in Algorithm 1: where M is the maximum hash value while S denotes the scaled factor that determines the density of sampled k-mers in the set. FracMinHash has been widely adopted in other tools, such as Sourmash () and Skani (), due to its excellent performance. The advantage of using FracMinHash over MinHash () is that it ensures an unbiased estimation of the Jaccard similarity of k-mer sets with very dissimilar sizes (), providing better approximation quality than MinHash and its variants (, ). However, FracMinHash usually produces a larger hash set compared to Mash (), requiring more memory space. Step 2 in HyperGen alleviates the increased memory issue.

2.2.2 Step 2: hyperdimensional encoding for k-mer hash

In Fig. 1a, after the k-mer hashing and sampling process, Mash-like sketching algorithms (such as Mash (), Sourmash (), and Mash Screen ()) directly use the sampled k-mer hash set as the sketch to compute the Jaccard similarity for given sequences.

In Fig. 1b, HyperGen adds an additional step, called Hyperdimensional Encoding for k-mer Hash (Line 5–13 in Algorithm 1), before the sketch is generated. This step essentially converts the discrete and numerical hashes in the k-mer hash set to a D-dimensional and nonbinary vector, called sketch hypervector. The hypervector dimension D is normally large (1024–8192) to ensure good accuracy. In particular, each hash value in the k-mer hash set is uniquely mapped to the associated binary HV hv as Line 6–11 of Algorithm 1. HyperGen relied on recursive random bit generation to produce binary HVs of arbitrary length: the k-mer hash value is set as the initial seed of the pseudo function. For each iterative step, a 64 b random integer rnd is generated using seed. The generated integer rnd is not only assigned to the corresponding bits in hv, but is also set as the next seed.

The hash function that maps the k-mer hash value to the binary HV hv is the key component of HyperGen because it determines the speed and quality of genome sketch generation. The following factors should be considered when selecting a good function: (i) The function needs to be fast enough to reduce the additional overhead for sketch generation. (ii) The generated random binary HVs need to be able to provide enough randomness (i.e. the binary HVs are as orthogonal as possible). This is because binary HVs are essentially random binary bit streams that need to be nearly orthogonal to each other to satisfy the quasi-orthogonal requirements. (iii) The sketches results should be reproducible (i.e. the identical bit streams can be generated using the same seed). We adopt a fast and high-quality pseudo RNG (https://github.com/wangyi-fudan/wyhash) in Rust language (), which passes two randomness tests: TestU01 and Practrand (). In this case, we can use the pseudo RNG to stably generate high-quality and reproducible binary HVs.

Figure 2 shows an example of generating the sketch HVs with dimension D =8 for two genome sequences based on k-mer size k =3 and k-mer hash set size N =4. Each sampled k-mer hash value in the hash set is converted to the corresponding binary HV using the function RNG . Then, all N binary HVs are aggregated into a single sketch HV based on the following point-wise vector addition: where the binary HV is first converted to . hvi denotes the i-th binary HV in the set. Then all binary HVs in the set are aggregated together to create the corresponding sketch HV. Compared to Mash-liked sketching approaches (, , ), HyperGen is more memory efficient because the sketch HV format is more compact with space complexity, which is independent of the k-mer hash set size N. Meanwhile, HyperGen’s hyperdimensional encoding step helps to achieve better ANI similarity estimation quality (see Section 3).

Figure 2

Sketch hypervector generation and set intersection computation in HyperGen. Each k-mer with size k =3 first passes through a hash function h(x). The k-mers (A = AGACTT and B = AGACTC) are hashed to hash set. Then each k-mer hash value is converted into the associated orthogonal binary HV. The set intersection between two k-mer hash sets is computed using Equation (11).

2.2.3 Step 3: ANI estimation using sketch hypervector

The generated sketch hypervector can be used to efficiently estimate the ANI similarity. HyperGen estimates ANI value using the same approach in (). The ANI under the Poisson distribution is estimated as: where denotes the Jaccard similarity between genome sequence A and sequence B while k is the k-mer size.

Therefore, ANI estimation in HyperGen becomes calculating Jaccard similarity based on sketch HVs. Equation (1) shows that the intersection size and the set size of two k-mer hash sets are the keys to calculating the Jaccard similarity. For , the cardinality of a set is computed as follows: which shows the set cardinality can be computed based on the L2 norm of sketch HV. The computation of set intersection in HyperGen is similar to DotHash ()’s Equation (5) because HVs in HyperGen share the same quasi-orthogonal properties as DotHash. Then, Equation (5) becomes:

With Equation (9) and Equation (10), HyperGen first estimates the following Jaccard similarity using the derived sketch HVs:

Then ANI in Equation (8) can be easily calculated.

2.3 Software implementation and optimization

HyperGen is developed using the Rust language, and the code is available at https://github.com/wh-xu/Hyper-Gen. We present the following optimizations to improve the speed and efficiency of HyperGen.

2.3.1 Sketch quantization and compression

Although the sketch HV has a compact data format with high memory efficiency, there still exists data redundancy in sketch HVs that can be utilized for further sketch compression. Our experimental observation is that the value range of sketch HVs is distributed within a bell curve (see Supplementary Fig. S2). Rather than store the full-precision sketch hypervector (e.g. INT32), we perform lossless compression by quantizing the HV to a lower bit width. The quantized bits are concatenated together using bit-packing.

2.3.2 Fast HV aggregation using SIMD

The inner loop of binary HV aggregation step in Algorithm 1 incurs significant runtime overhead when a large HV dimension D is applied. We develop a parallelized HV aggregation using single instruction, multiple data (SIMD) instruction to reduce the impact of increased HV aggregation time. As shown in Supplementary Fig. S3, the HV aggregation optimized by SIMD only takes negligible portion of the total sketching time.

2.3.3 Parallel sketching

HyperGen provides two sketching modes: 1. normal mode and 2. fast mode. The normal mode sketches genome files on CPU with multithreading. The fast mode offloads genome sketching to GPU with better computing capabilities. The fast mode can be widely supported by commodity GPUs. Our measurement results in Fig. 5 show that HyperGen’s fast mode further improves the sketching speed by to over normal mode.

2.3.4 Pre-computation for HV sketch norm

The L2 norm of each sketch hypervector, , is precomputed during sketch generation phase. The L2 norm value is stored along with the sketch hypervector to reduce redundant computations for the ANI calculation phase.

3 Evaluation and results

3.1 Evaluation methodology

3.1.1 Genome dataset and hardware setting

The evaluation is conducted on a machine with a 16-core Intel i7-11700K CPU with up to 5.0 GHz frequency, 2TB NVMe PCIe 4.0 storage, and 64GB of DDR4 memory. Unless otherwise specified, all programs are allowed to use 16 threads with their default parameters. Five genome datasets in Supplementary Table S2 are adopted for benchmarking. The datasets include: Bacillus cereus, Escherichia coli, NCBI RefSeq (), Parks MAGs (), and GTDB MAGs (). These datasets vary in terms of number of genomes, lengths, and sizes.

3.1.2 Benchmarking tools

We compare HyperGen with five state-of-the-art tools, including Mash (), Bindash (), Sourmash (), Dashing 2 (), FastANI (), Skani (), and ANIm (). Mash, Bindash, Sourmash, and Dashing 2 are sketch-based tools for ANI estimation. In comparison, FastANI and Skani use mapping-based methods while ANIm adopts the most accurate base-level alignment-based method to calculate the ANIs. ANIm results are regarded as the ground truth. Specifically, we use NUCleotide MUMmer () to generate the alignment results and then convert the alignment data into the corresponding ground-truth ANIs. Dashing 2 uses its weighted bagminhash mode. HyperGen (similar to Mash, Bindash, Sourmash, and Dashing 2) is an ANI approximation tool for the high ANI regime. We follow the previous work () and only preserve ANI values > 85. The versions and commands used are summarized in Supplementary Table S1. HyperGen uses k-mer size k =21, scaled factor S =1500 as suggested in previous works (, , ). Our analysis in Section 3.2.1 shows that the HV dimension D =4096 achieves a good balance between ANI estimation error and sketching complexity. So we set it as the default parameter. HyperGen also supports the fast mode which accelerates the sketching process on GPU.

3.1.3 Evaluation metrics

ANI Precision. One of the critical metrics for evaluating the effectiveness of a genome sketching tool is the precision of ANI estimation. We use three metrics to evaluate the ANI approximation errors: 1. mean absolute error (MAE), 2. root mean squared error (RMSE), and 3. mean percentage absolute error (MPAE). We also adopt the Pearson correlation coefficient to assess the linearity of the ANI estimate with respect to ground truth.

Computation and Memory Efficiency. An ideal genome sketching scheme should be able to generate compact sketch files at the cost of short runtime, especially for large-scale genomic analysis. To compare the computation and memory efficiency of evaluated tools, we measure and report the wall-clock runtime and sketch sizes during database search.

3.2 ANI estimation quality

In this section, we study the quality of ANI estimation by performing the following pairwise ANI experiment. First, the largest 100 genome files are collected from each dataset. Then, each batch of 100 genome files is used to calculate the pairwise and symmetric 100 × 100 ANI matrix.

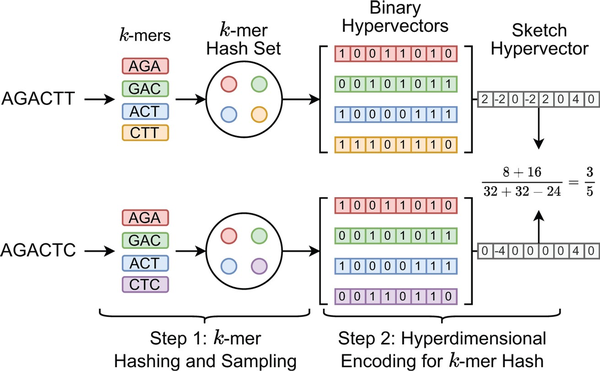

3.2.1 HyperGen ANI quality using different parameters

We first evaluate the impact of HyperGen’s two algorithmic parameters: scaled factor S and HV dimension D on the final ANI estimation errors and linearity. The experimental results are depicted in Fig. 3, where the scaled factor S and the HV dimension D vary from 800 to 2000 and from 256 to 16 384, respectively. It shows that: for all scaled factors, the ANI approximation errors decrease significantly as D increases from 256 to 4096. This is because a larger HV dimension can produce better orthogonality, which is helpful to reduce the approximation error of the set intersection according to the theory in (). But increasing the HV dimension larger than D =4096 does not yield a significant error reduction or linearity improvement.

Figure 3

Error metrics (MAE, RMSE, MPAE) and ANI linearity (Pearson coefficient) as a function of scaled factor S and HV dimension D.

It is also observed that a smaller scaled factor S generally leads to a worse ANI approximation error when using the same HV dimension D. The reason behind this is: a smaller S that produces a larger hash threshold value as in Equation (2), will generate a denser sampling of k-mers. This increases the size of sampled k-mer hash set. As a result, more binary HVs need to be aggregated to the sketch HV. The excessive number of binary HVs degrades the orthogonality between binary HVs, reducing the approximation accuracy for set cardinality. To balance between the quality and complexity of the ANI approximation, we choose S =1500 and D =4096 as the default scaled factor and HV dimension, respectively.

3.2.2 Comparison with other sketching tools

We also compare the quality of the ANI estimation for various tools, including Mash, Bindash, Dashing 2, Sourmash, FastANI and Skani. For fair comparison, the sketch-based tools (HyperGen, Mash, Bindash, Sourmash, and Dashing 2) use the same sketch size. Other parameters are the same as their default parameters. Specifically, HyperGen uses D =4096, while Mash and Dashing 2 use a sketch size of 1024.

HyperGen can be used to estimate the Jaccard index. First we perform Jaccard estimation experiment and compare HyperGen to Mash, Bindash, Dashing 2, and Sourmash. Supplementary Table S3 shows the error metrics with respect to the true Jaccard results. The 100 × 100 Jaccard matrix for Bacillus cereus and Escherichia coli datasets is computed. HyperGen achieves competitive Jaccard estimation accuracy with other baseline tools.

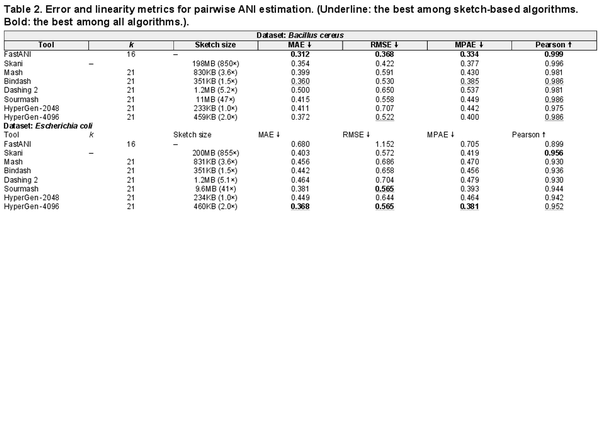

Table 2 summarizes the ANI error and linearity metrics with respect to the ground truth values on Bacillus cereus and Escherichia coli datasets. For the Bacillus cereus dataset, HyperGen is slightly inferior to Bindash, FastANI and Skani, which yields a comparable Pearson correlation coefficient compared to the other sketch-based tools (Mash and Dashing 2). In the Escherichia coli dataset, HyperGen consistently surpasses all other sketch-based tools, providing both lower ANI approximation errors and better linearity. Meanwhile, HyperGen’s sketch size is over 800× smaller than Skani. These experiments demonstrate that HyperGen is capable of delivering a high quality of ANI estimation.

3.3 Genome database search

One critical workload that genome sketching tools can accelerate is the genome database search. Meanwhile, the genome database search can be extended to multiple downstream applications.

3.3.1 ANI linearity and quality

We extensively consider the five evaluated datasets as reference databases. We run FastANI, Skani, Mash, Bindash, Dashing 2, and HyperGen using the commands and queries listed in Supplementary Table S2. Sourmash is not considered because it does not support multi-thread execution. The execution consists of two steps: (i) All tools first generate reference sketches for the target database; (ii) the second step is to search for the query genomes (given in Supplementary Table) against the built reference sketches. Note that FastANI and Skani were unable to complete the database search on the Parks MAGs and GTDB datasets in one shot because it requires more memory than the available 64GB and experienced out of memory issues. We divided FastANI and Skani executions into smaller batches and measured the accumulative runtime.

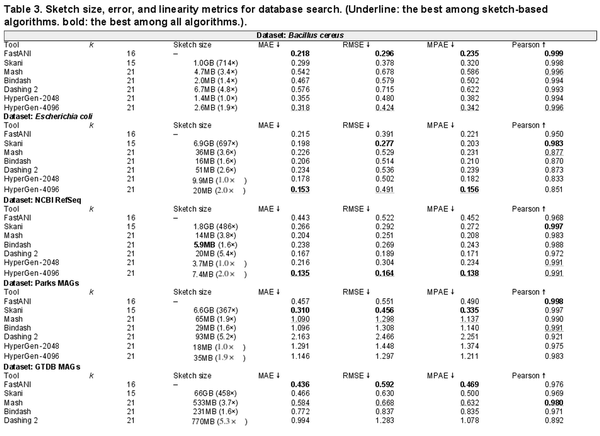

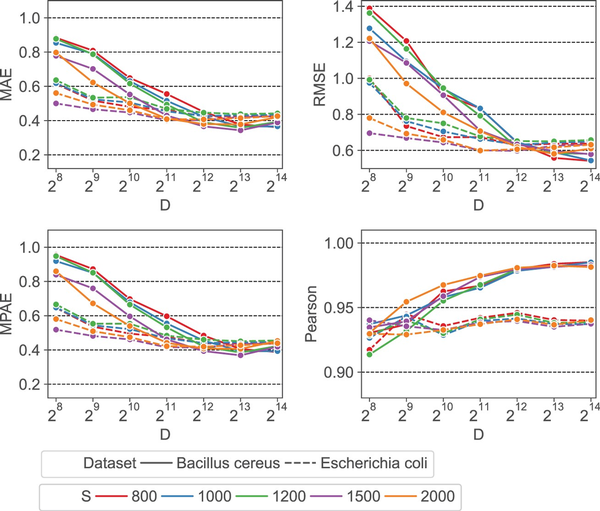

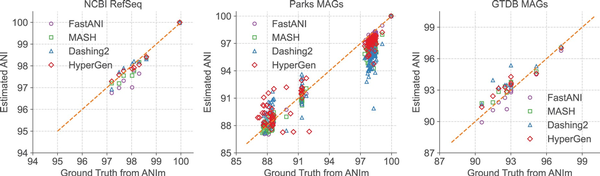

The estimated ANI values generated in Table 3 by each tool in the NCBI RefSeq, Parks MAGs, and GTDB MAGs datasets are depicted in Fig. 4 with their corresponding ground truth values from ANIm. Data points with ANI < 85 are filtered. It shows that HyperGen produces good ANI linearity compared to the ground truth results.

Figure 4

Database search ANI comparison for FastANI, Mash, Dashing 2, HyperGen, and ground-truth ANIm on NCBI RefSeq, Parks MAGs, and GTDB MAGs datasets.

Quantitative results in terms of numerical error and linearity metrics are summarized in Table 3. The ANI error distribution for each tool can be seen in Supplementary Fig. S4. In datasets Bacillus cereus, Escherichia coli, and NCBI RefSeq, HyperGen achieves the lowest ANI errors among all sketch-based tools, delivering more accurate ANI estimations as compared to Mash, Bindash, and Dashing 2. HyperGen still shows competitive accuracy over mapping-based FastANI and Skani. In Escherichia coli and NCBI RefSeq, HyperGen outperforms FastANI and Skani in terms of most error metrics and produces comparable Pearson coefficients. HyperGen is capable of achieving state-of-the-art error and linearity for large-scale genome search. Meanwhile, the required sketch size is two orders of magnitude smaller than Skani.

We study the impact of genome quality on the ANI estimation accuracy. We calculate the BUSCO completeness value () for each reference genome file. As shown in Supplementary Fig. S5, the more incomplete genomes of GTDB MAGs have higher ANI estimation error. Hence, applying HyperGen to incomplete genomes leads to more significant ANI errors.

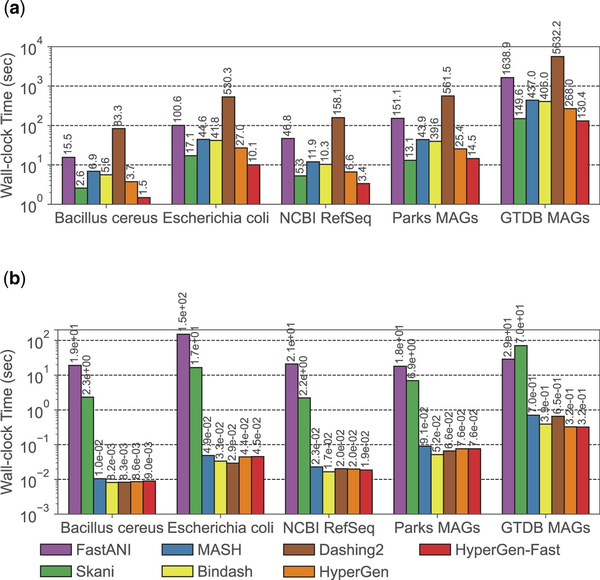

3.3.2 Runtime performance

The wall-clock time spent on two major steps during database search: reference sketch generation and query search, is illustrated in Fig. 5. HyperGen -Fast means using the fast sketching mode on GPU. The reference sketching step is mainly bounded by the sketch generation process, while the search step is bounded by the sketch file loading and ANI calculation. HyperGen without fast mode achieves the 2nd fastest sketching speed, slightly slower than Skani. After enabling fast mode, HyperGen is the fastest sketching tool for most evaluated datasets. The sketching speed of HyperGen is to faster than Bindash. HyperGen is significantly faster ( to ) than the mapping-based FastANI.

Figure 5

Runtime performance comparison for genome search in Table 3. (a) Reference sketching time and (b) query search time.

For query search, HyperGen is also one of the fastest tools. The search speedup of HyperGen over FastANI and Skani is to because FastANI and Skani require slow sequence mapping and large index file loading processes. Moreover, the speedup of HyperGen is more significant for larger datasets. Dashing 2 sketch size is about of HyperGen so it takes more time to load sketch files. The reduced sketch size helps to save sketch loading time. Meanwhile, the HV sketch format of HyperGen allows us to adopt highly vectorized programs to compute ANI with a short processing latency.

3.3.3 Memory efficiency

The file sizes of the reference sketches generated by Mash, Dashing 2, and HyperGen, are listed in Table 3. We apply the Sketch Quantization and Compression technique to HyperGen. As a result, HyperGen consumes the smallest memory space among the three sketch-based tools. The sketch sizes produced by Mash and Dashing 2 are to of HyperGen’s sketch sizes. This suggests that HyperGen is the most space-efficient sketching algorithm. Compared to original datasets with GB sizes, a compression ratio of can be achieved by only processing the sketch files. This enables the large-scale genome search on portable devices with memory constraints. HyperGen’s memory efficiency comes from two factors. First, the Hyperdimensional Encoding for k-mer Hash step converts discrete hash values into continuous high-dimensional sketch HVs, which are more compact than hash values. Second, HyperGen’s Sketch Quantization and Compression provides additional compression through further removing redundant information in sketch HVs.

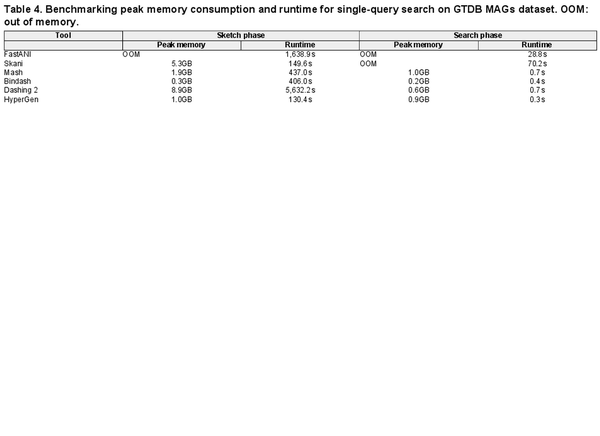

Table 4 summarizes performance metrics in terms of peak memory consumption and runtime for the GTDB MAG dataset search. HyperGen achieve both the fastest sketching and search speed due to the efficient HDC algorithm as well as software optimizations. FastANI and Skani experience OOM (out of memory) issues because they require a large memory space to store intermediate data for sequence mapping. In comparison, HyperGen consumes about 1GB of memory for the sketching or searching phase, significantly lower than FastANI and Skani. This indicates that HyperGen is friendly to run on memory-limited device, such as laptop.

4 Discussion and conclusion

Fast and accurate estimation of Average Nucleotide Identity (ANI) is considered crucial in genomic analysis because ANI is widely adopted as a standardized measure of genome file similarity. In this work, we present HyperGen: a genome sketching tool based on hyperdimensional computing (HDC) (, ) that improves accuracy, runtime performance, and memory efficiency for large-scale genomic analysis. HyperGen inherits the advantages of both FracMinHash -based sketching (, ) and DotHash (). HyperGen first samples the k-mer set using FracMinHash. Then, the discrete k-mer hash set is encoded into the corresponding sketch HV in hyperdimensional space. This allows the genome sketch to be presented in compact vectors without sacrificing accuracy. HyperGen software implemented in Rust language deploys vectorized routines for both sketch and search steps. The evaluation results show that HyperGen offers superior ANI estimation quality over state-of-the-art sketch-based tools (, ). Meanwhile, HyperGen delivers not only the fastest sketch and search speed, but also the highest memory efficiency in terms of the sketch file size.

Future directions of HyperGen include the following aspects:

Further Compression and Faster Large-scale Search: The vector representation of sketch HVs allows us to apply more optimizations on the top of HyperGen. For instance, we can employ lossy vector compression techniques, such as product quantization (, ) and residual quantization (), to reduce sketch size and memory footprint. This is advantageous for achieving rapid genome database search on embedded or mobile devices.

On the other hand, the search step in HyperGen requires intensive GEMM operations to obtain ANI values between genomes. The large-scale database search can be further accelerated using advanced hardware architectures with high data parallelism and optimized interfaces. Previous work () demonstrates that deploying HDC-based bioinformatics analysis on GPU exhibits at least one order of magnitude speedup over CPU.

More genome workloads: HyperGen can be extended to support a wider range of genomic applications. For example, in metagenome analysis, we can utilize HyperGen to perform the containment analysis for genome files such as (). To realize this, the sketch HVs generated by HyperGen can be used to calculate the max-containment index instead of ANI. The ANI estimation error and memory requirements of HyperGen can be reduced by considering the more accurate ANI estimation based on multi-resolution k-mers ().

References

- Baker DN, Langmead B. Dashing: fast and accurate genomic distances with hyperloglog. Genome Biol2019;20:265–12.

- Baker DN, Langmead B. Genomic sketching with multiplicities and locality-sensitive hashing using dashing 2. Genome Res2023;33:1218–27.

- Broder AZ. 1997. On the resemblance and containment of documents. In Proceedings. Compression and Complexity of SEQUENCES 1997 (Cat. No. 97TB100171), pages 21–29. IEEE.

- Brown CT, Irber L. sourmash: a library for minhash sketching of DNA. JOSS2016;1:27.

- Chaumeil P-A, Mussig AJ, Hugenholtz P et al Gtdb-tk v2: memory friendly classification with the genome taxonomy database. Bioinformatics2022;38:5315–6.

- Ertl O. Setsketch: filling the gap between minhash and hyperloglog. Proc VLDB Endow2021;14:2244–57.

- Firtina C, Park J, Alser M et al Blend: a fast, memory-efficient and accurate mechanism to find fuzzy seed matches in genome analysis. NAR Genom Bioinform2023;5:lqad004.

- Guo R, Sun P, Lindgren E et al Accelerating large-scale inference with anisotropic vector quantization. In: International Conference on Machine Learning, 2020. pp. 3887–3896. PMLR.

- Hera MR, Pierce-Ward NT, Koslicki D. Deriving confidence intervals for mutation rates across a wide range of evolutionary distances using fracminhash. Genome Res2023; gr–277651.

- Hernández-Salmerón JE, Irani T, Moreno-Hagelsieb G et al Fast genome-based delimitation of enterobacterales species. PLoS One2023;18:e0291492.

- Irber L, Brooks PT, Reiter T et al Lightweight compositional analysis of metagenomes with fracminhash and minimum metagenome covers. BioRxiv2022;2022–01.

- Jain C, Dilthey A, Koren S et al A fast approximate algorithm for mapping long reads to large reference databases. In International Conference on Research in Computational Molecular Biology, 2017. pp. 66–81. Springer.

- Jain C, Rodriguez-R LM, Phillippy AM et al High throughput ani analysis of 90k prokaryotic genomes reveals clear species boundaries. Nat Commun2018;9:5114.

- Jégou H, Douze M, Schmid C et al Product quantization for nearest neighbor search. IEEE Trans Pattern Anal Mach Intell2011;33:117–28.

- Kanerva P. Hyperdimensional computing: an introduction to computing in distributed representation with high-dimensional random vectors. Cogn Comput2009;1:139–59.

- Kanerva P, Kristoferson J, Holst A. Random indexing of text samples for latent semantic analysis. In: Proceedings of the Annual Meeting of the Cognitive Science Society, volume 22, 2000.

- Kang J, Xu W, Bittremieux W et al Accelerating open modification spectral library searching on tensor core in high-dimensional space. Bioinformatics2023;39:btad404.

- Kim Y et al Geniehd: efficient DNA pattern matching accelerator using hyperdimensional computing. In: 2020 Design, Automation & Test in Europe Conference & Exhibition (DATE), 2020. pp. 115–120.

- Kurtz S, Phillippy A, Delcher AL et al Versatile and open software for comparing large genomes. Genome Biology2004;5:1–9.

- Lee D, Kim C, Kim S et al Autoregressive image generation using residual quantization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022. pp. 11523–11532.

- Lee I, Ouk Kim Y, Park S-C et al Orthoani: an improved algorithm and software for calculating average nucleotide identity. Int J Syst Evol Microbiol2016;66:1100–3.

- Liu S, Koslicki D. Cmash: fast, multi-resolution estimation of k-mer-based jaccard and containment indices. Bioinformatics2022;38:i28–i35.

- Matsakis ND, Klock FS. The rust language. Ada Lett2014;34:103–4.

- Nunes I, Heddes M, Vergés P et al Dothash: estimating set similarity metrics for link prediction and document deduplication. In: Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2023. pp. 1758–1769.

- Ondov BD, Treangen TJ, Melsted P et al Mash: fast genome and metagenome distance estimation using minhash. Genome Biol2016;17:132–14.

- Ondov BD, Starrett GJ, Sappington A et al Mash screen: high-throughput sequence containment estimation for genome discovery. Genome Biol2019;20:232–13.

- Parks DH, Rinke C, Chuvochina M et al Recovery of nearly 8,000 metagenome-assembled genomes substantially expands the tree of life. Nat Microbiol2017;2:1533–42.

- Parks DH, Chuvochina M, Waite DW et al A standardized bacterial taxonomy based on genome phylogeny substantially revises the tree of life. Nat Biotechnol2018;36:996–1004.

- Parks DH, Chuvochina M, Chaumeil P-A et al A complete domain-to-species taxonomy for bacteria and archaea. Nat Biotechnol2020;38:1079–86.

- Sahlgren M. 2005. An introduction to random indexing. In: Methods and Applications of Semantic Indexing Workshop at the 7th International Conference on Terminology and Knowledge Engineering.

- Shahroodi T, Zahedi M, Firtina C et al Demeter: a fast and energy-efficient food profiler using hyperdimensional computing in memory. IEEE Access2022;10:82493–510.

- Shaw J, Yu YW. Fast and robust metagenomic sequence comparison through sparse chaining with skani. Nat Methods2023;20:1661–5.

- Shrivastava A. 2017. Optimal densification for fast and accurate minwise hashing. In International Conference on Machine Learning, pages 3154–3163. PMLR.

- Simão FA, Waterhouse RM, Ioannidis P et al Busco: assessing genome assembly and annotation completeness with single-copy orthologs. Bioinformatics2015;31:3210–2.

- Sleem L, Couturier R. Testu01 and practrand: tools for a randomness evaluation for famous multimedia ciphers. Multimed Tools Appl2020;79:24075–88.

- Soon WW, Hariharan M, Snyder MP et al High-throughput sequencing for biology and medicine. Mol Syst Biol2013;9:640.

- Stephens ZD, Lee SY, Faghri F et al Big data: astronomical or genomical? PLoS Biol 2015;13:e1002195.

- Xu W, Kang J, Bittremieux W et al Hyperspec: ultrafast mass spectra clustering in hyperdimensional space. J Proteome Res2023;22:1639–48.

- Zhao X. Bindash, software for fast genome distance estimation on a typical personal laptop. Bioinformatics2019;35:671–3.

- Zou Z, Chen H, Poduval P et al Biohd: an efficient genome sequence search platform using hyperdimensional memorization. In: Proceedings of the 49th Annual International Symposium on Computer Architecture, 2022. pp. 656–669.