Introduction

Eruption of the canine normally starts high in the maxilla, giving it the longest path of eruption. After the mandibular and maxillary third molars, maxillary canines are the second most commonly impacted teeth, with an incidence of 0.9–2.2%. Various local, systemic, and genetic factors contribute to the etiology of maxillary canine impaction. The primary goal in diagnosis and treatment planning of an impacted maxillary canine is to identify whether the canine is impacted and to predict its subsequent eruption and the outcome of orthodontic intervention.

Several radiographic aids have been advocated for the diagnosis of maxillary canine impaction. Ericson and Kurol found that early diagnosis could be made with a series of measurements on panoramic films, such as the angle between the long axis of the canine and the midline, the linear distance from the canine cusp tip to the occlusal plane, and the location of the canine in different sectors in relation to adjacent teeth. Previous literature reported that locating the impacted canine by sector classification is more accurate and reliable than tooth angulation for predicting impaction.

Computer-aided diagnosis (CAD) has evolved drastically due to the increased availability of digital data, greater computational power, and advances in artificial intelligence (AI) technology. AI can be classified into symbolic AI and machine learning. Symbolic AI is a collection of techniques that work on a set of rules, whereas in machine learning, the model learns from examples, by either supervised or unsupervised learning, and adapts based on the available data. For supervised learning algorithms, the input data and the correct output data (referred to as labels) must be available before the training phase starts. The input data are given to the model, which then estimates the output values. The output values are compared with the labels, and the difference between them is referred to as the error. The error is reduced by repeatedly training and validating the model until it is minimal.

In dentistry, radiographic records and their interpretation play a very important role in diagnosis, treatment planning, and periodic monitoring of dental health. Processing of radiographic images has therefore become an area of interest for automation. Such automation can improve the accuracy of clinical decision-making in routine practice and thereby reduce the stress and fatigue of dentists. In orthodontics, the usefulness of AI has been studied for cephalometric landmark detection, teeth segmentation, and teeth classification. The purpose of using AI is to assist in making more accurate and unbiased decisions, thus increasing the efficiency of systems.

According to the available literature, no study has evaluated the diagnostic accuracy of an AI model for predicting the favorability of maxillary canine impaction using orthopantomograms (OPGs). Thus, the aim of this study was to test the accuracy of an AI algorithm in predicting the favorability of maxillary canine impaction, as compared with the conventional manual tracing method, using OPGs.

Materials and Methods

Ethical Approval and Study Design

OPGs of patients with impacted maxillary canine were collected retrospectively from the archives of the department. The sample for the study was selected based on the following criteria.

Inclusion Criteria

Diagnostic OPGs of patients above 13 years of age with unilateral/bilateral impacted maxillary canine.

Patients with buccal/palatal canine impaction.

Patients with retained/exfoliated deciduous maxillary canine with unerupted permanent maxillary canine.

OPGs with good image characteristics.

No gender restrictions.

Exclusion Criteria

Patients who have previously undergone orthodontic treatment.

Patients with craniofacial anomalies associated with/without eruption abnormalities.

Patients with a history of traumatic injury and/or surgery in the head and neck region.

Patients with musculoskeletal or bone disorders.

Measurements

The parameters on OPGs used to assess the severity of the canine impaction were selected based on the conclusions of the systematic review by Ravi et al., and they are as follows.

Sector Classification

The position of the impacted maxillary canine was assigned to one of five sectors, defined by the midline of the maxillary dentition, the long axes of the central and lateral incisors, and a line passing through the mesial surface of the first premolar. The impaction was considered unfavorable for spontaneous eruption if the cusp tip of the maxillary canine crossed sector 2.

Angle between the Long Axis of the Maxillary Canine and the Midline

The midline was defined as the line passing from the anterior nasal spine through the interincisal contact of the permanent maxillary central incisors. An angulation exceeding 31° was indicative of unfavorable canine impaction.
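The angular measurement described here can be illustrated with a minimal sketch, assuming each line is defined by two landmark points in image pixel coordinates. The function name, the coordinates, and the choice to report the angle between the two directed lines are illustrative assumptions, not the study's software.

```python
import math

def angle_between_deg(a1, a2, b1, b2):
    """Angle in degrees (0 to 180) between direction a1->a2 and direction b1->b2."""
    ax, ay = a2[0] - a1[0], a2[1] - a1[1]
    bx, by = b2[0] - b1[0], b2[1] - b1[1]
    cross = ax * by - ay * bx   # proportional to the sine of the angle
    dot = ax * bx + ay * by     # proportional to the cosine of the angle
    return math.degrees(math.atan2(abs(cross), dot))

# Hypothetical landmark coordinates (x, y) in pixels, y increasing downward.
anterior_nasal_spine = (100.0, 20.0)
interincisal_contact = (100.0, 120.0)
canine_root_apex = (140.0, 30.0)
canine_cusp_tip = (120.0, 80.0)

angle_to_midline = angle_between_deg(
    anterior_nasal_spine, interincisal_contact,  # midline
    canine_root_apex, canine_cusp_tip,           # long axis of the canine
)
# Threshold from the text: more than 31 degrees indicates unfavorable impaction.
unfavorable_by_midline_angle = angle_to_midline > 31.0
```

Because the result depends on the direction in which each line is traversed, any real implementation would need to fix a landmark order (for example, apex to cusp tip) consistently across all images.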

Angle between the Long Axis of the Maxillary Canine and the Lateral Incisor

Angulation of the long axis of the maxillary canine exceeding 51.47° relative to the lateral incisor was indicative of unfavorable canine impaction.

Angle between the Long Axis of the Maxillary Canine and the Occlusal Plane

The occlusal plane was constructed as a horizontal line passing through the incisal edge of the permanent maxillary central incisor and the mesiobuccal cusp tip of the first permanent maxillary molar on the given side. The impaction was considered to be unfavorable if the angulation was above 132°.
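The cut-off values given for the sector classification and the three angular parameters can be collected into a small rule set. The sketch below is illustrative only: the class and function names are hypothetical, and it flags each parameter separately because the text does not state how the parameters were combined into a single decision.

```python
from dataclasses import dataclass

# Cut-off values quoted in the text.
SECTOR_MAX = 2                    # cusp tip beyond sector 2 is unfavorable
MIDLINE_MAX_DEG = 31.0
LATERAL_INCISOR_MAX_DEG = 51.47
OCCLUSAL_PLANE_MAX_DEG = 132.0

@dataclass
class CanineMeasurements:
    sector: int
    angle_midline_deg: float
    angle_lateral_incisor_deg: float
    angle_occlusal_plane_deg: float

def unfavorable_flags(m: CanineMeasurements) -> dict:
    """Return, per parameter, whether the measurement exceeds its cut-off."""
    return {
        "sector": m.sector > SECTOR_MAX,
        "midline": m.angle_midline_deg > MIDLINE_MAX_DEG,
        "lateral_incisor": m.angle_lateral_incisor_deg > LATERAL_INCISOR_MAX_DEG,
        "occlusal_plane": m.angle_occlusal_plane_deg > OCCLUSAL_PLANE_MAX_DEG,
    }

# Hypothetical measurements for one canine.
example = CanineMeasurements(
    sector=3,
    angle_midline_deg=28.0,
    angle_lateral_incisor_deg=55.0,
    angle_occlusal_plane_deg=120.0,
)
flags = unfavorable_flags(example)
```

Keeping the flags separate mirrors how the parameters are evaluated one by one in the Results.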

Perpendicular Distance between the Maxillary Canine Tip to the Occlusal Plane and Midline

These distances were measured by projecting perpendicular lines from the occlusal plane and from the midline, respectively, to the cusp tip of the impacted maxillary canine.
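As a minimal sketch of this linear measurement, the perpendicular distance from a point to a line through two landmarks can be computed from a cross product. The function name and the coordinates below are hypothetical, and the result is in pixels unless a calibration factor (not described in the text) is applied.

```python
import math

def perpendicular_distance(point, line_p1, line_p2):
    """Shortest distance from `point` to the infinite line through line_p1 and line_p2."""
    dx, dy = line_p2[0] - line_p1[0], line_p2[1] - line_p1[1]
    length = math.hypot(dx, dy)
    if length == 0:
        raise ValueError("line_p1 and line_p2 must be distinct points")
    # |cross product| equals the parallelogram area; dividing by the base gives the height.
    cross = dx * (point[1] - line_p1[1]) - dy * (point[0] - line_p1[0])
    return abs(cross) / length

# Hypothetical landmarks (pixels): a horizontal occlusal plane and a vertical midline.
cusp_tip = (130.0, 60.0)
occlusal_plane = ((40.0, 100.0), (160.0, 100.0))
midline = ((100.0, 20.0), (100.0, 120.0))

distance_to_occlusal_plane = perpendicular_distance(cusp_tip, *occlusal_plane)
distance_to_midline = perpendicular_distance(cusp_tip, *midline)
```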

Development of AI Model

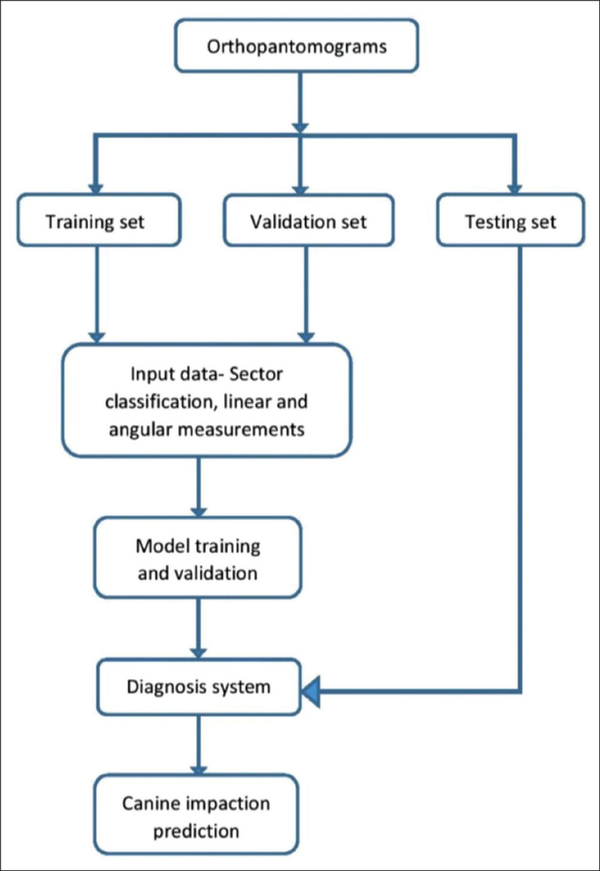

The model was developed on computer equipment at the Department of Computer Science Engineering. A deep convolutional neural network (CNN) model was used to assess the favorability of maxillary canine impaction. The CNN architecture comprised three layers: two consecutive convolutional pooling layers followed by a fully connected classification layer. The two convolutional pooling layers had 128 and 64 neurons, respectively, and both used the same fixed 8 × 8 convolutional kernel and 2 × 2 pooling kernel. The neurons of the last convolutional pooling layer were connected to the fully connected classification layer, which determines the class assigned to the input image. The proposed model was implemented in MATLAB (R2022b, v9.13.0; The MathWorks, Inc., USA). In the initial phase, all images were reduced to 80 × 215 pixels to optimize the data for model training. Then, using the associated landmarks from each training image, the training process was run on the whole target dataset. In short, the proposed model was built in stages using the deep CNN. The batch size was 329, the learning rate was 0.01, and training ran for 150 epochs. The trained model then recognized the favorability in the test OPGs automatically.
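To make the stated kernel sizes concrete, the sketch below traces how an 80 × 215 input would shrink through two convolution and pooling stages. Several details are assumptions that the text does not specify: stride 1 and no padding for the convolutions, non-overlapping 2 × 2 pooling, and reading the 64 "neurons" of the second stage as 64 feature maps. The study's model was built in MATLAB; Python is used here only for the arithmetic.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, window):
    """Output length of non-overlapping pooling along one dimension."""
    return size // window

def feature_map_sizes(height, width, kernel=8, pool=2, n_stages=2):
    """Trace the spatial size after each convolution and pooling step."""
    sizes = []
    for _ in range(n_stages):
        height, width = conv_out(height, kernel), conv_out(width, kernel)
        sizes.append(("conv", height, width))
        height, width = pool_out(height, pool), pool_out(width, pool)
        sizes.append(("pool", height, width))
    return sizes

sizes = feature_map_sizes(80, 215)
final_height, final_width = sizes[-1][1], sizes[-1][2]

# Assumption: 64 feature maps leave the second stage and are flattened
# before the fully connected classification layer.
flattened_features = final_height * final_width * 64
```

Under these assumptions the spatial size goes from 80 × 215 to 73 × 208, 36 × 104, 29 × 97, and finally 14 × 48, so the classification layer would receive 43,008 inputs; different stride or padding choices would change these numbers.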

Input Dataset

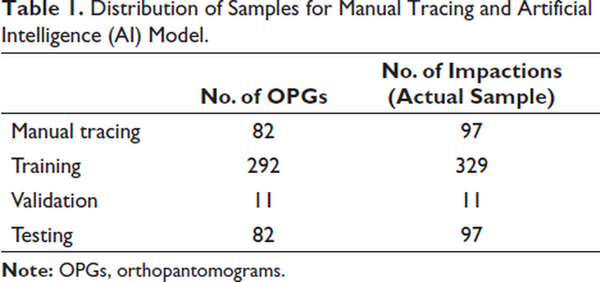

The input dataset for the AI model included OPG images of 437 maxillary canine impactions along with normative values for the sector classification and angular parameters. The images were distributed into:

Training group: 329 images

Validation group: 11 images

Testing group: 97 images

The distribution of samples is depicted in Table 1. The training group was used to train the model to automatically predict the favorability of the canine impaction. The validation and testing groups were used to evaluate the performance of the software.
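A split with these group sizes can be sketched as follows. The random shuffle, the seed, and the placeholder file names are assumptions for illustration; the text does not describe how images were allocated to the groups.

```python
import random

def split_dataset(items, n_train=329, n_val=11, n_test=97, seed=0):
    """Shuffle once, then cut into training, validation, and testing groups."""
    if len(items) != n_train + n_val + n_test:
        raise ValueError("group sizes must add up to the number of items")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test

# Hypothetical identifiers standing in for the 437 canine images.
image_ids = [f"canine_{i:03d}.png" for i in range(437)]
train_ids, val_ids, test_ids = split_dataset(image_ids)

# Share of the dataset in each group, in percent.
shares = {
    name: round(100 * len(group) / len(image_ids), 1)
    for name, group in (("train", train_ids), ("val", val_ids), ("test", test_ids))
}
```

With 329, 11, and 97 images, the groups make up roughly 75.3%, 2.5%, and 22.2% of the dataset, which shows how small the validation group is relative to the other two.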

The overall model building process involving the training, validation, and testing phases is depicted in Figure 1.

Figure 1. Flowchart Depicting Model Building Process.

Training Phase

OPG images, along with the normative values for the sector classification and angular parameters, were given as input to the AI model. The steps in the training process were as follows:

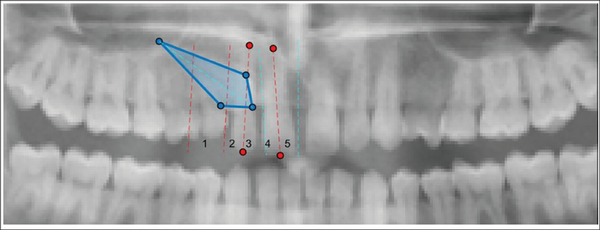

Step 1: The OPGs were uploaded in JPEG/TIFF format and cropped using a bounding box of uniform size of 80 × 215 pixels (Figures 2 and 3).

Figure 2. Point for Cropping Orthopantomogram.

Figure 3. Cropped Image of Orthopantomogram.

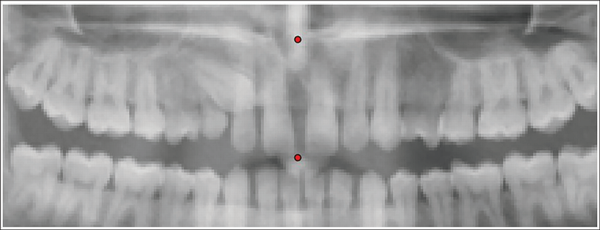

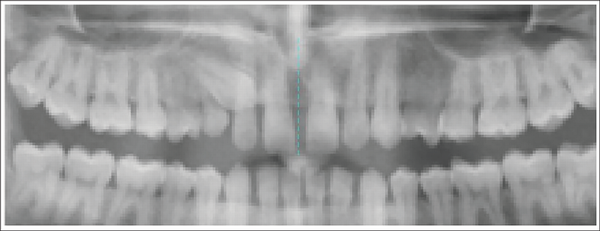

Step 2: Two points (anterior nasal spine and contact point between two maxillary central incisors) were marked to generate the midline (Figures 4 and 5).

Figure 4. Points for Generation of Midline.

Figure 5. Generation of Midline.

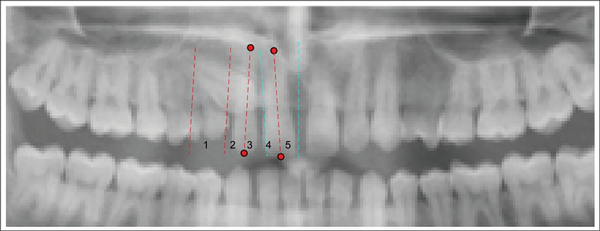

Step 3: Two points (root apex and the midpoint of the incisal edge of incisors) were marked to generate the long axes of central and lateral incisors (Figure 6).

Figure 6. Generation of the Sector Lines.

Step 4: Four points (cusp tip, prominent mesial contour, prominent distal contour, root apex) were marked to generate the outline of the impacted maxillary canine (Figure 7).

Figure 7. Generation of the Canine Outline.

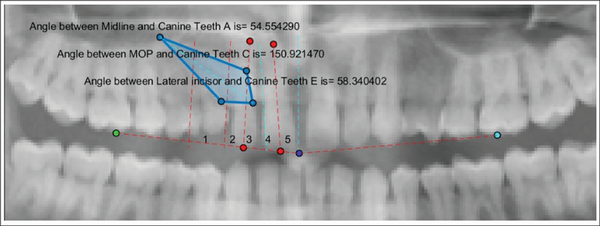

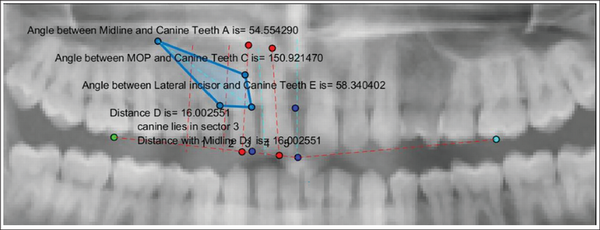

Step 5: Three points (incisal edge of the permanent maxillary central incisor and the mesiobuccal cusp tip of the first permanent maxillary molar on either side of the midline) were marked to generate the occlusal plane (Figure 8).

Figure 8. Generation of Occlusal Plane.

Step 6: A point was marked on the occlusal plane to measure the vertical distance from the cusp tip of the impacted maxillary canine to the occlusal plane (Figure 9).

Figure 9. Final Artificial Intelligence (AI) Prediction of Impacted Maxillary Canine.

Step 7: A point was marked on the midline to measure the horizontal distance from the cusp tip of the impacted maxillary canine to the midline (Figure 9).

The system generated as output the angles made by the long axis of the impacted maxillary canine with the midline, occlusal plane, and lateral incisor, and the linear distances from the canine cusp tip to the occlusal plane and midline. The final layer showed the measurements of all six parameters, which were stored as a single image in PNG format (Figure 8). Based on the measurements, the AI model automatically predicted the favorability of maxillary canine impaction, and the final measurements along with the prediction were reported in Excel format.

Validation Phase

The AI model was validated using 11 randomly selected images. Errors that occurred during the training phase were corrected iteratively until the error reached a minimum, after which iterative learning with the training data was stopped.

Testing Phase

A fresh set of 97 impacted maxillary canine images was used for testing. These images were traced manually, forming Group 1 (Manual Tracing), and tested using the developed AI model, forming Group 2 (AI Model). All the abovementioned parameters were evaluated and compared between the two groups.

Manual Tracing of OPGs

The radiographic images of the 97 impacted teeth were annotated using acetate tracing paper of 0.003-inch thickness and a sharp 3H drawing pencil. The outlines and long axes of the impacted canine and the incisors of that side, the midline, and the occlusal plane were drawn on the OPGs as described previously. The selected angular and linear parameters were then measured using a ruler and protractor.

The angular and linear parameters and the sector classification selected for assessment of maxillary canine impaction on OPGs were determined for all the samples, compared with the data obtained from the AI algorithm, and then subjected to statistical analysis.

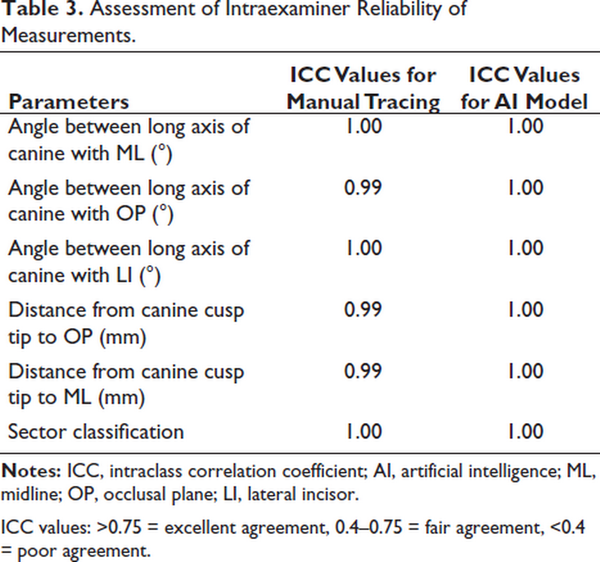

The reproducibility of measurements made with the AI model and manual tracing was evaluated by repeating the measurements on 20 randomly selected samples by the same investigator at an interval of 30 days.

Statistical Analysis

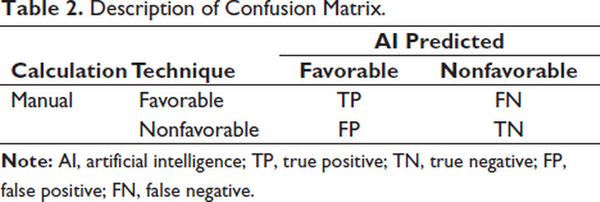

Statistical analysis was carried out using the Statistical Package for the Social Sciences (SPSS), version 16.0 (SPSS Inc., Chicago, IL). The intraexaminer reliability of linear and angular measurements was evaluated with the intraclass correlation coefficient (ICC). Mean and standard deviation were calculated for both manual and AI diagnostic results. To assess the performance of the AI model for the angular and sector parameters, diagnostic accuracy, sensitivity, specificity, and positive and negative predictive values were calculated from the confusion matrix data (Table 2) using the following formulas.

where TP: true positive; TN: true negative; FP: false positive; FN: false negative.
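For reference, the standard definitions of these measures in terms of TP, TN, FP, and FN can be written as a short function. The counts in the example are illustrative and are not taken from the study's tables; they were chosen only because they reproduce percentages close to those reported for sector classification, under the assumption that "favorable" is treated as the positive class.

```python
def diagnostic_metrics(tp, tn, fp, fn):
    """Standard confusion-matrix measures, returned as fractions between 0 and 1."""
    return {
        "sensitivity": tp / (tp + fn),                # true positive rate
        "specificity": tn / (tn + fp),                # true negative rate
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "ppv": tp / (tp + fp),                        # positive predictive value
        "npv": tn / (tn + fn),                        # negative predictive value
    }

# Illustrative counts for 97 test images (an assumption, not the paper's Table 2).
metrics = diagnostic_metrics(tp=67, tn=29, fp=0, fn=1)
percentages = {name: round(100 * value, 2) for name, value in metrics.items()}
```

With these counts a single false negative lowers sensitivity to about 98.5% and the negative predictive value to about 96.7%, while specificity and the positive predictive value stay at 100%.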

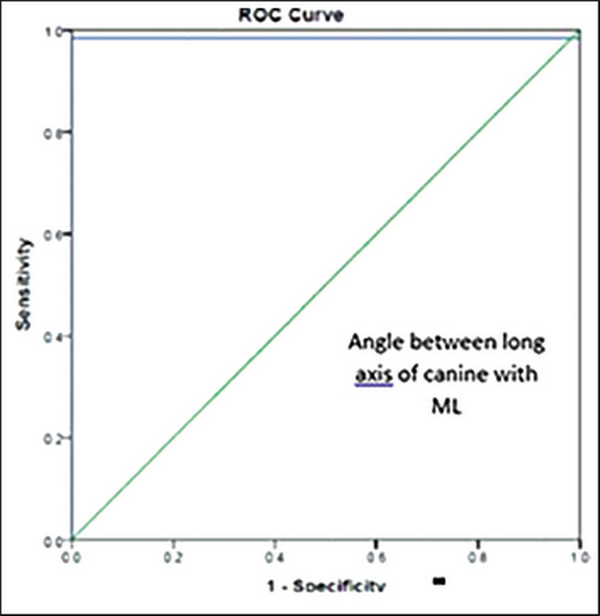

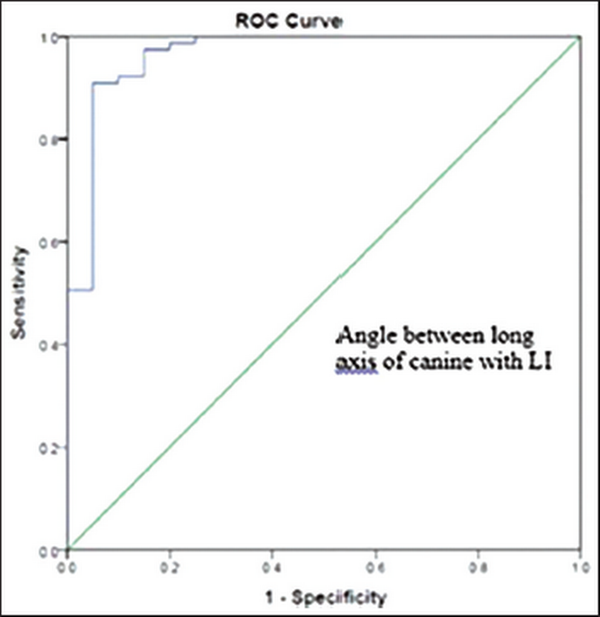

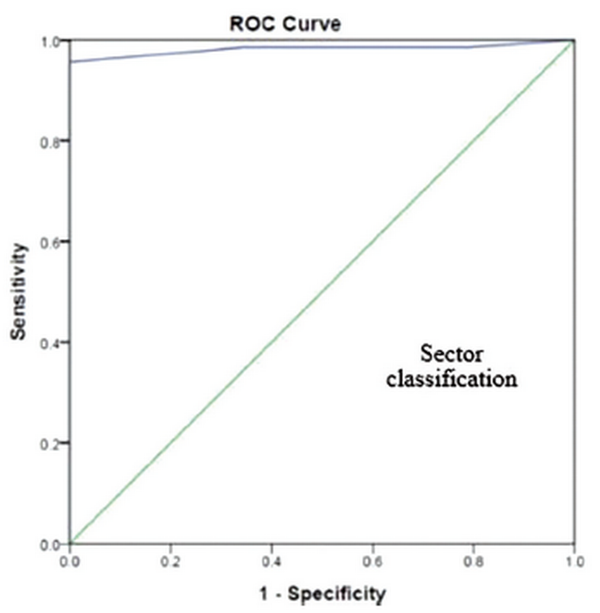

The mean linear measurements of the two groups were compared using a paired t-test.
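The paired t-test statistic can be sketched with the standard library alone. The measurement values below are hypothetical, and the function returns only the statistic and degrees of freedom; a p-value would come from the t distribution, as SPSS reports it.

```python
import math
import statistics

def paired_t_statistic(x, y):
    """t statistic and degrees of freedom for paired samples x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length of at least 2")
    diffs = [a - b for a, b in zip(x, y)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    t = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return t, len(diffs) - 1

# Hypothetical distances (mm) for the same four canines measured by both methods.
manual_mm = [1.0, 2.0, 3.0, 4.0]
ai_mm = [2.0, 2.0, 4.0, 6.0]
t_value, dof = paired_t_statistic(manual_mm, ai_mm)
```

The test asks whether the mean of the paired differences is zero, so a significant result points to a systematic offset between the two methods rather than describing how strongly they correlate.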

Receiver operating characteristic (ROC) curves and the area under the curve (AUC), with 95% confidence intervals, were computed to assess the overall diagnostic performance of the AI algorithm.
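As a rough illustration of what the AUC measures, it can be computed as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The scores and labels below are hypothetical, and the sketch does not reproduce the confidence intervals reported by SPSS.

```python
def roc_auc(scores, labels):
    """AUC via pairwise comparison of positive (label 1) and negative (label 0) scores."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties contribute half a win
    return wins / (len(positives) * len(negatives))

# Hypothetical model scores and reference labels.
example_scores = [0.1, 0.4, 0.35, 0.8]
example_labels = [0, 0, 1, 1]
auc = roc_auc(example_scores, example_labels)  # 3 of 4 pairs ranked correctly
```

An AUC of 1.0 means every positive case is ranked above every negative case, and 0.5 corresponds to chance-level ranking.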

Results

The ICC values were above 0.9, indicating excellent intraexaminer reliability of the measurements (Table 3).

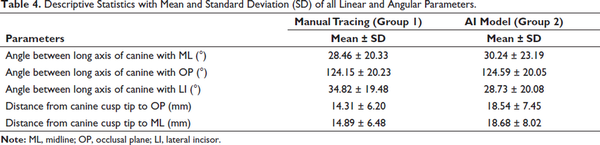

Descriptive statistics with the mean and standard deviation of all the linear and angular parameters are shown in Table 4.

Angular Parameters and Sector Classification

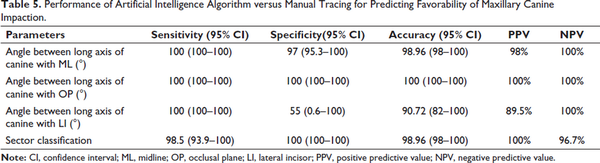

The sensitivity, specificity, accuracy, and positive and negative predictive values for angular parameters and sector classification are provided in Table 5.

Sensitivity

The angles between the long axis of the canine and the midline, occlusal plane, and lateral incisor had the highest sensitivity, at 100%. Sector classification had a sensitivity of 98.5% (93.9–100).

Specificity

The highest specificity, 100%, was found for the angle between the long axis of the canine and the occlusal plane and for sector classification, followed by the angle between the long axis of the canine and the midline, with a specificity of 97% (95.3–100). The lowest specificity, 55% (0.6–100), was found for the angle between the long axis of the canine and the lateral incisor.

Accuracy

The angle between the long axis of the canine and the occlusal plane had an accuracy of 100%. The angle between the long axis of the canine and the midline and the sector classification had the same accuracy of 98.96% (98–100). The angle between the long axis of the canine and the lateral incisor had the lowest accuracy, at 90.72% (82–100).

Positive Predictive Value

The angle between the long axis of the canine and the occlusal plane and the sector classification had the highest positive predictive value, 100%, followed by 98% for the angle between the long axis of the canine and the midline. The lowest positive predictive value, 89.5%, was found for the angle between the long axis of the canine and the lateral incisor.

Negative Predictive Value

The angles between the long axis of the canine and the midline, occlusal plane, and lateral incisor had the highest negative predictive value, 100%, followed by sector classification with a negative predictive value of 96.7%.

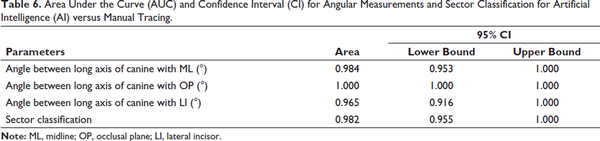

ROC Curves

The AUC values of the ROC curves, with 95% confidence intervals, were above 0.9, indicating that the proposed AI system is able to accurately predict the favorability of maxillary canine impaction (Table 6 and Figures 10–13).

Figure 10. Receiver Operating Characteristic (ROC) Curve for Angle between Long Axis of Canine with Midline (ML).

Figure 11. Receiver Operating Characteristic (ROC) Curve for Angle between Long Axis of Canine with Lateral Incisor (LI).

Figure 12. Receiver Operating Characteristic (ROC) Curve for Angle between Long Axis of Canine with Occlusal Plane (OP).

Figure 13. Receiver Operating Characteristic (ROC) Curve for Sector Classification.

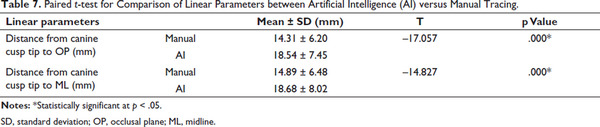

Linear Parameters

The paired t-test showed a statistically significant difference between manual tracing and the AI model for both the distance from the canine cusp tip to the occlusal plane and the distance from the canine cusp tip to the midline (p < .001) (Table 7).

Discussion

AI has been widely used in the medical field for image analysis. Commonly used models include artificial neural networks (ANNs), CNNs, random forests, and support vector machines. CNNs are the most commonly used AI models. This study used a CNN architecture to test the accuracy of an AI model developed using OPGs in predicting the favorability of maxillary canine impaction, as compared with manual prediction.

Several applications of AI for diagnosis and treatment planning in orthodontics have been published earlier, such as cephalometric landmark identification, decisions on orthodontic extractions, anchorage patterns, growth maturation status, and orthognathic surgery. Most of these studies used radiographic diagnostic tools such as periapical radiographs, occlusal radiographs, lateral cephalograms, OPGs, and cone-beam computed tomography (CBCT).

The accuracy and reliability of AI have been investigated on OPGs for various purposes: tooth detection, with a reported sensitivity of 99.41% and precision of 99.45%; tooth numbering, with sensitivity and specificity of 98% and 99.94%, respectively; caries and restoration detection, with an accuracy of 93.6%; and identification and classification of dental implant systems, with an accuracy of 67%. Margot et al. reported that a logistic regression model is a useful tool to predict maxillary canine impaction on OPGs.

The results of the present study showed that the performance of the AI model, when compared with manual tracing, was excellent for all the parameters. Sector classification fell slightly short of 100%, with a sensitivity of 98.5% (93.9–100) and a negative predictive value of 96.7%, because one sample that was diagnosed as sector 2 (favorable) in manual tracing was diagnosed as sector 5 (not favorable) in the AI prediction.

With regard to the predictive power for non-favorable canine impactions, the angle between the long axis of the canine and the lateral incisor had a specificity of 55% (0.6–100) and a positive predictive value of 89.5%, which shows that this parameter is the least effective in identifying actual non-favorable canine impactions as non-favorable. This could be because the image of the lateral incisor was often unclear on OPGs due to superimposition of surrounding structures. Despite the variation in predictive power among the individual parameters, the overall performance of the proposed AI model was excellent, as was evident in the ROC curves, which showed a high AUC of above 0.9 for all the angular parameters and sector classification.

Decision-making for the impacted maxillary canine using the linear parameters could not be performed because of the lack of normative values in the literature. In addition, linear parameters have been used to predict favorability based on the change in the magnitude of these distances in OPGs taken at sequential intervals. A paired t-test was used to compare the linear parameters between the AI model and manual tracing, and the difference was statistically significant, indicating poor agreement between the manual tracing and the AI prediction for these measurements. This could be due to the magnification errors in OPGs that affect linear measurements.

One major limitation of this study is that the AI model used OPGs, which are a 2D imaging modality. Future studies can be planned with three-dimensional imaging modalities such as CBCT scans collected from various clinicians, which will aid in model training for improved performance. The AI algorithm was applied at the level of decision-making regarding the favorability of eruption in maxillary canine impaction, but not at the level of detecting the impaction. This was due to the difficulty in defining the region of interest and locating the impacted maxillary canine when it was not in its usual erupted position. In addition, it was difficult to define a complete outline, including the root, of the impacted maxillary canine because of similar gray scales on the OPG. Newer AI models developed to identify impacted maxillary canines in OPGs, combined with the current model to predict favorability, could provide a complete AI solution to diagnose and plan the treatment of maxillary canine impaction in the near future.

Conclusion

The proposed AI model had high accuracy in predicting the favorability of eruption in maxillary canine impactions. The performance was excellent for sector classification and for the angles between the long axis of the canine and the midline and occlusal plane, whereas it was slightly lower but acceptable for the angle between the long axis of the canine and the lateral incisor. Due to the lack of normative values, decision-making on favorability could not be performed with linear parameters.

Declaration of Conflicting Interests

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This research received an IOS research grant from the Indian Orthodontic Society Research Foundation (IOSRF) for the academic year 2022.

Bhadrinath S

https://orcid.org/0000-0002-1206-4082

References

- 1. van der Linden FPGM, Duterloo HS. Development of the Human Dentition. An Atlas. HarperCollins; 1976.

- 2. Bishara SE. Impacted maxillary canines: a review. Am J Orthod Dentofacial Orthop. 1992;101(2):159–171.

- 3. Alqerban A, Jacobs R, Lambrechts P, Loozen G, Willems G. Root resorption of the maxillary lateral incisor caused by impacted canine: a literature review. Clin Oral Investig. 2009;13(3):247–255.

- 4. Bedoya MM, Park JH. A review of the diagnosis and management of impacted maxillary canines. J Am Dent Assoc. 2009;140(12):1485–1493.

- 5. Manne R, Gandikota C, Juvvadi SR, Rama HR, Anche S. Impacted canines: etiology, diagnosis, and orthodontic management. J Pharm Bioallied Sci. 2012;4(Suppl. 2):S234–S238.

- 6. Ericson S, Kurol J. Early treatment of palatally erupting maxillary canines by extraction of the primary canines. Eur J Orthod. 1988;10(4):283–295.

- 7. Warford JH Jr, Grandhi RK, Tira DE. Prediction of maxillary canine impaction using sectors and angular measurement. Am J Orthod Dentofacial Orthop. 2003;124(6):651–655.

- 8. Tuzoff DV, Tuzova LN, Bornstein MM, et al. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac Radiol. 2019;48(4):20180051.

- 9. Arik SO, Ibragimov B, Xing L. Fully automated quantitative cephalometry using convolutional neural networks. J Med Imaging (Bellingham). 2017;4(1):014501.

- 10. Wang CW, Huang CT, Lee JH, et al. A benchmark for comparison of dental radiography analysis algorithms. Med Image Anal. 2016;31:63–76.

- 11. Miki Y, Muramatsu C, Hayashi T, et al. Classification of teeth in cone-beam CT using deep convolutional neural network. Comput Biol Med. 2017;80:24–29.

- 12. Makaremi M, Lacaule C, Mohammad-Djafari A. Deep learning and artificial intelligence for the determination of the cervical vertebra maturation degree from lateral radiography. Entropy. 2019;21:1222.

- 13. Kim MJ, Liu Y, Oh SH, Ahn HW, Kim SH, Nelson G. Evaluation of a multi-stage convolutional neural network-based fully automated landmark identification system using cone-beam computed tomography-synthesized posteroanterior cephalometric images. Korean J Orthod. 2021;51(2):77–85.

- 14. Ravi I, Srinivasan B, Kailasam V. Radiographic predictors of maxillary canine impaction in mixed and early permanent dentition—a systematic review and meta-analysis. Int Orthod. 2021;19(4):548–565.

- 15. Stivaros N, Mandall NA. Radiographic factors affecting the management of impacted upper permanent canines. J Orthod. 2000;27(2):169–173.

- 16. Power SM, Short MB. An investigation into the response of palatally displaced canines to the removal of deciduous canines and an assessment of factors contributing to favourable eruption. Br J Orthod. 1993;20(3):215–223.

- 17. Alqerban A, Jacobs R, Fieuws S, Willems G. Comparison of two cone beam computed tomographic systems versus panoramic imaging for localization of impacted maxillary canines and detection of root resorption. Eur J Orthod. 2011;33(1):93–102.

- 18. Alqerban A, Storms AS, Voet M, Fieuws S, Willems G. Early prediction of maxillary canine impaction. Dentomaxillofac Radiol. 2016;45(3):1–8.

- 19. Stramotas S, Geenty JP, Petocz P, Darendeliler MA. Accuracy of linear and angular measurements on panoramic radiographs taken at various positions in vitro. Eur J Orthod. 2002;24(1):43–52.

- 20. Eslami E, Barkhordar H, Abramovitch K, Kim J, Masoud MI. Cone-beam computed tomography vs conventional radiography in visualization of maxillary impacted-canine localization: a systematic review of comparative studies. Am J Orthod Dentofacial Orthop. 2017;151(2):248–258.

- 21. Choi HI, Jung SK, Baek SH, et al. Artificial intelligent model with neural network machine learning for the diagnosis of orthognathic surgery. J Craniofac Surg. 2019;30(7):1986–1989.

- 22. Kunz F, Stellzig-Eisenhauer A, Zeman F, Boldt J. Artificial intelligence in orthodontics: evaluation of a fully automated cephalometric analysis using a customized convolutional neural network. J Orofac Orthop. 2020;81(1):52–68.

- 23. Baksi S, Freezer S, Matsumoto T, Dreyer C. Accuracy of an automated method of 3D soft tissue landmark detection. Eur J Orthod. 2021;43(6):622–630.

- 24. Kök H, Acilar AM, İzgi MS. Usage and comparison of artificial intelligence algorithms for determination of growth and development by cervical vertebrae stages in orthodontics. Prog Orthod. 2019;20(1):41.

- 25. Schwendicke F, Golla T, Dreher M, Krois J. Convolutional neural networks for dental image diagnostics: a scoping review. J Dent. 2019;91:103226.

- 26. Khanagar SB, Al-Ehaideb A, Vishwanathaiah S, et al. Scope and performance of artificial intelligence technology in orthodontic diagnosis, treatment planning, and clinical decision-making: a systematic review. J Dent Sci. 2021;16(1):482–492.

- 27. Jung SK, Kim TW. New approach for the diagnosis of extractions with neural network machine learning. Am J Orthod Dentofacial Orthop. 2016;149(1):127–133.

- 28. Abdalla-Aslan R, Yeshua T, Kabla D, Leichter I, Nadler C. An artificial intelligence system using machine-learning for automatic detection and classification of dental restorations in panoramic radiography. Oral Surg Oral Med Oral Pathol Oral Radiol. 2020;130(5):593–602.

- 29. Benakatti VB, Nayakar RP, Anandhalli M. Machine learning for identification of dental implant systems based on shape—a descriptive study. J Indian Prosthodont Soc. 2021;21(4):405–411.

- 30. Margot R, Maria CL, Ali A, Annouschka L, Anna V, Guy W. Prediction of maxillary canine impaction based on panoramic radiographs. Clin Exp Dent Res. 2020;6(1):44–50.

- 31. Sajnani AK, King NM. Early prediction of maxillary canine impaction from panoramic radiographs. Am J Orthod Dentofacial Orthop. 2012;142(1):45–51.

- 32. Richardson A. An investigation into the reproducibility of some points, planes, and lines used in cephalometric analysis. Am J Orthod Dentofacial Orthop. 1966;52(9):637–651.