Introduction

The aim of the study was to investigate the role of facial expressions in body language comprehension. Using the ERP paradigm of semantic violation, we considered whether a deficit in face coding could affect the coding of body language in healthy controls. Recent electrophysiological studies (, ) have shown that the semantic comprehension of body language signals can be investigated by contrasting the perception of mimicry that is congruently or incongruently labeled. Although it has long been known that facial expressions convey a broad and rich spectrum of information about others’ emotional and mental states, recent findings demonstrate that body language alone can also convey the emotional states of others (; ; ) and can, for example, reveal a deceptive or threatening attitude (; ). The neuroimaging literature (e.g. ) has shown that, although the initial identification of faces and bodies as such may occur in distinct brain areas (among others, the fusiform face area [FFA] () and the extrastriate body area ()), the two types of information are processed conjointly and combined, especially at the level of the posterior superior temporal sulcus (; ). Nevertheless, little is known about the relevance (i.e. the relative weight) of facial expressions compared with bodily expressivity. Although emotions conveyed by bodily expressions are quite easily recognizable (), face obscuration reduces pantomime comprehension in typically developing (TD) subjects, as opposed, for example, to patients with bilateral amygdala damage (). In addition, when facial expressions are incongruent with bodily expressions (for example, of anger), TD controls respond much more slowly in a matching-to-sample task (), suggesting that bodily expressions are better recognized when accompanied by a face expressing the same emotion ().

In another study, the effect of face blurring on body language processing was investigated in a blindsight patient to whom body images were presented in the blind field. fMRI data recorded during the presentation of whole-body images (happy, neutral) with the face blurred showed an effect of emotional content on brain activation despite cortical blindness, suggesting that body language processing is performed automatically and unconsciously and does not rely solely on facial expressions. Similarly, the recognition of emotional expressions from the entire body has been investigated by manipulating the congruence between body and facial expressions; the results indicated a strong influence of incongruent body expressions on the recognition of facial expressions. Consistently, an ERP study () showed that observers asked to judge a facial expression were strongly influenced by emotional body language (EBL) (see also: ).

The purpose of this study was twofold: (i) to investigate the role played by facial expressions in body-language comprehension by depriving half of the stimuli of facial information (via face blurring) and comparing brain activity and behavioral performance across the two stimulus types; (ii) to investigate the effects, and possible interactions, of face blurring and the sex of the viewer on the ability to understand body language.

Here we investigated whether face obscuring affected the semantic comprehension of gestures by analyzing both the N400 amplitude (associated with language/gesture ‘incomprehension’) and the late positivity (LP), associated with stimulus comprehension (; ) and decision-making processes (). In this vein, a larger N400 and a smaller positivity would index difficulty in integrating semantic information and in deciding about gesture/caption congruence. Overall, by manipulating the congruence of pictures and captions, we expected to find an enhanced anterior N400 in response to incorrect associations, in line with previous findings (; ; , ; ; , ,). According to Proverbio and Riva (2009), the N400 would reflect the activation of brain mechanisms that automatically process biological actions as meaningful units by means of visuomotor mirror neurons (; ). Therefore, we hypothesized that the N400 would be modulated by face presence/absence and would reflect a body-language comprehension mechanism in the two genders, as a function of the facial information provided.

Indeed, previous ERP investigations have shown sex differences in the amplitude of the LP and N400 components during the processing of social information. For example, an earlier study (, ) found larger N400 amplitudes in female than in male students in response to body signals incongruent with a previous verbal description, and interpreted these results in light of a greater female sensitivity to social information. Other findings, including those of Proverbio et al. (,), reported differences between male and female participants in the activation of the action understanding system, the superior temporal sulcus (STS) and the ventral premotor cortex during the observation of cooperative vs affective human interactions. Sex differences in the amplitude of the LP component (indexing attention to social information) have also been reported by several studies on the perception of faces (Proverbio et al., ,; Burdwood and Simons, 2016) or bodies ().

In depriving the pictures of facial information, we simulated the lack of information experienced by face-avoidant perceivers with social deficits (e.g. autistic individuals). A number of neuroimaging studies have revealed atypical patterns of brain activation in individuals with autism spectrum disorder (ASD) when processing faces. These include fusiform hypoactivation, as well as abnormal development and increased activation of the amygdala, a structure involved in threat detection, fear perception and avoidant behavior (; ). Furthermore, disrupted structural connectivity and weaker functional connectivity between these areas in ASD individuals (; ) are thought to contribute to symptoms such as impaired perceptual and affective processing of social stimuli (e.g. gestures and body language). Autistic individuals also tend to avoid eye contact and not to monitor the eye region or the face in general (). One of the deficits associated with this syndrome is a reduced ability to understand the emotional and mental states of others (also called a theory of mind deficit). For example, Wallace and coauthors compared adults with ASD with TD control subjects in two computerized tests of face and object discrimination (): although ASD individuals were impaired in all tests of face processing, their object processing was intact.

By observing the behavior and brain responses of female and male individuals in our task, we investigated the effects of our manipulation in individuals with varying amounts of autistic/empathic traits, according to the ‘empathizing-systemizing’ theory () and, in particular, the ‘extreme male brain’ theory. This theory has been advanced to account for sex differences in empathic traits and may be heuristically useful, although it is not universally accepted (e.g. see the critical paper by ). We also expected to find behavioral and/or electrophysiological indices of a sex difference in the ability to decode body language, as predicted by the literature (e.g. ; ,; ).

Materials and methods

Participants

Thirty-one Italian university students (15 males and 16 females; mean age ∼24 years, s.d. = 3.5 years; age range 18–25 years) took part in the study. Exclusion criteria, assessed through self-report, included known psychiatric or neurological illness, a history of epilepsy or head trauma, and drug/alcohol abuse. All participants were right-handed, as assessed with the Italian version of the Edinburgh Handedness Inventory, and had normal or corrected-to-normal vision. The data of five participants were discarded because of EEG artifacts or technical problems, leaving 26 subjects (13 males and 13 females; mean age = 24 years, s.d. = 2.8, median = 24) for EEG averaging; the behavioral analysis was carried out on the same sample of 26 participants. The normality of the data distribution was assessed with the Shapiro–Wilk test (P > 0.89). There was no age difference between the male and female groups, as assessed by a chi-square test (P = 0.8186).

The experiment was conducted with the approval of the Ethical Committee of University of Milano-Bicocca and in compliance with APA ethical standards for the treatment of human volunteers (1992, American Psychological Association). Informed written consent was obtained from all subjects. All participants received academic credit for their participation. All experiments were performed in accordance with relevant guidelines and regulations.

Stimuli

In total, 800 pictures were selected from a wider set of 1122 and validated as described previously (). Each color photo showed half of the body of a female or male actor wearing a black sweater, photographed against a white wall. The pictures depicted 6 different actors (3 males and 3 females) performing 187 different gestures that delivered precise semantic information about the speaker’s plans, desires, motivations, attitudes, beliefs and thoughts (such as: ‘I am getting out of here’, ‘Let’s keep calm’, ‘it’s cold’, ‘it was so tall’, ‘he is lying’, ‘it happened a long time ago’, ‘there were so many’, ‘let’s have a drink’). The gestures were validated for clarity and comprehensibility to Italian speakers by an independent group of 18 judges (8 males, 10 females) belonging to the same statistical cohort, who evaluated the coherence between the gesture shown in each picture and the verbal description provided on a scale from 0 to 2 (0 = not appropriate, 1 = somewhat appropriate, 2 = very appropriate). Following this procedure, 19 gesture categories were discarded for the following reasons:

gestures received low validation scores (between 0.30 and 1.70);

gestures were not recognized by all subjects because they are used only in restricted Italian geographic areas;

gesture comprehension was reduced by the static nature of the photograph.

At the end of this process, 800 stimuli were selected. Stimulus luminance varied from 3.02 cd/m² to 35.6 cd/m², with an average of 13.66 cd/m² for congruent stimuli and 13.58 cd/m² for incongruent stimuli. Stimuli were therefore isoluminant across classes, as shown by an analysis of variance (ANOVA) (F(2, 798) = 1.02; P = 0.86). Stimulus size was 371 × 278 pixels, corresponding to 8° 30′ × 6° 30′ of visual angle.
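
The degree values above follow from the standard relation θ = 2·arctan(s / 2d) between an object’s physical size s and the viewing distance d. The sketch below assumes the 100 cm viewing distance reported in the Procedure and that the stated angles refer to the full picture width and height; it works backwards from those angles to the implied on-screen size in centimeters, which the text does not report. All function and constant names are illustrative.

```python
import math

VIEWING_DISTANCE_CM = 100.0  # assumed: viewing distance reported in the Procedure


def visual_angle_deg(size_cm: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Visual angle (degrees) subtended by an object of size_cm seen at distance_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


def size_for_angle_cm(angle_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Physical size (cm) that subtends angle_deg at distance_cm (inverse of the above)."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def dms_to_deg(degrees: int, arcmin: int) -> float:
    """Convert degrees plus arcminutes (e.g. 8 deg 30 min) to decimal degrees."""
    return degrees + arcmin / 60.0


width_deg = dms_to_deg(8, 30)    # 8.5 degrees
height_deg = dms_to_deg(6, 30)   # 6.5 degrees
width_cm = size_for_angle_cm(width_deg)
height_cm = size_for_angle_cm(height_deg)
print(f"implied on-screen size: {width_cm:.1f} x {height_cm:.1f} cm")
```

At 100 cm, one degree corresponds to roughly 1.75 cm on screen, so small differences in viewing distance change the reported angles only slightly.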

The gestures could be of three types: iconic (visual representations of referential meaning), deictic (gestures indicating real, implied or imaginary persons, objects or directions) or emblematic (symbolic and conventional gestures with a standard meaning; e.g. the index and middle fingers forming a ‘V’ for ‘peace’, or thumbs up for ‘good’). Each gesture was associated with a short verbal label that could be congruent or incongruent with the image. The verbal descriptions were written in yellow Times New Roman font on a black background and presented in short lines (one to three words per line) for 700 ms at the center of the PC screen; each was followed, after an inter-stimulus interval (ISI) ranging from 100 to 200 ms, by the corresponding picture. The outer background was light gray. The Italian verbal descriptions of congruent and incongruent pictures were carefully matched for length (number of words and number of letters). Congruent labels were on average 2.74 words long (range 1–7 words), whereas incongruent labels were on average 2.78 words long. Likewise, congruent labels were on average 12.12 letters long, compared with 12.19 letters for incongruent labels (range 2–32 letters for both categories). Two ANOVAs carried out on word and letter length across the congruent and incongruent categories revealed no difference between categories.

Stimuli were balanced so that each individual actor, gender, and gesture type appeared in equal numbers across classes (congruent vs incongruent stimuli).

In half of the stimuli (carefully matched across categories and typologies), the face of the character was obscured with a blurring procedure (a condition called Faceless). See some examples in Figure 1. In summary, the stimulus set consisted of 400 standard pictures showing a face (200 congruent and 200 incongruent) and 400 pictures with a blurred face (200 congruent and 200 incongruent).

Fig. 1

Examples of standard and faceless pictures, congruent or incongruent with their caption. The faceless versions of the gestures were used to assess the specific role of facial information in body language understanding in both females and males. Captions were presented in Italian during the study and have been translated here for the reader.

Procedure

Participants were seated comfortably in a dark, acoustically and electrically shielded test area, facing a high-resolution screen located 100 cm from their eyes. They were instructed to gaze at the center of the screen, where a small cross served as a fixation point, and to avoid any eye or body movements during the recording session. The fixation point fell around the upper thoracic area of the characters, which allowed participants to keep their eyes on the central point while simultaneously monitoring the actors’ facial expressions and body language. Stimuli were presented in random order at the center of the screen in 12 runs of 66–70 trials, each lasting ∼4 min. Each run was preceded by a warning signal (a red cross) presented for 700 ms.

The sequence presentation order varied across subjects. Each sequence contained a balanced number of congruent and incongruent stimuli presented in randomized order, and each session began with three warning stimuli (‘READY’, ‘SET’ and ‘GO’) lasting 700 ms, followed by a cross (+) indicating the fixation point. Each sentence was presented for 700 ms and was followed by a random ISI of 100–200 ms. Picture duration was 1200 ms, and the ISI was 1500 ms. The experimental session was preceded by a training session consisting of two runs, one for each hand. The training stimuli, belonging to the congruent and incongruent categories, differed from those used in the experimental session, having been selected from the pictures discarded during stimulus validation.
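
The trial timing above can be sketched as an event schedule. This is a hypothetical reconstruction, not the authors’ presentation script: it assumes the 1500 ms ISI runs from picture offset to the next caption onset, draws the 100–200 ms caption-to-picture interval uniformly, and omits the warning signals at the start of each run.

```python
import random
from dataclasses import dataclass

# Durations in ms, as reported in the Procedure.
CAPTION_MS = 700
CAPTION_TO_PICTURE_MS = (100, 200)  # random ISI between caption and picture
PICTURE_MS = 1200
INTER_TRIAL_MS = 1500               # assumed: ISI from picture offset to next caption


@dataclass
class Event:
    label: str
    onset_ms: float
    duration_ms: float


def build_run(n_trials: int, seed: int = 0) -> list[Event]:
    """Hypothetical caption -> picture schedule for one run (warning signals omitted)."""
    rng = random.Random(seed)
    events: list[Event] = []
    t = 0.0
    for i in range(n_trials):
        events.append(Event(f"caption_{i}", t, CAPTION_MS))
        t += CAPTION_MS + rng.uniform(*CAPTION_TO_PICTURE_MS)
        events.append(Event(f"picture_{i}", t, PICTURE_MS))
        t += PICTURE_MS + INTER_TRIAL_MS
    return events


def run_duration_min(events: list[Event]) -> float:
    """Time from the first caption onset to the end of the last inter-trial interval."""
    last = events[-1]
    return (last.onset_ms + last.duration_ms + INTER_TRIAL_MS) / 60_000


for n in (66, 70):
    print(f"{n} trials: {run_duration_min(build_run(n)):.2f} min")
```

Under these assumptions each trial lasts 3.5–3.6 s, so runs of 66–70 trials take roughly 3.9–4.2 min, in line with the ∼4 min per run reported in the Procedure.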

The task required participants to decide, as accurately and quickly as possible, whether the gesture and its caption were congruent. Participants pressed a response key with their index finger (of the left or right hand) to indicate that a pair was congruent, and with their middle finger (of the left or right hand) to indicate that it was incongruent. Previous literature has shown no significant difference in response speed between the index and middle fingers in button-press tasks (). A choice-RT task, rather than a go/no-go or target-detection paradigm, was used to prevent negative readiness potentials related to action programming and execution from affecting only selected ERP waveforms, which would alter or mask the effect of the experimental variables. The response hand alternated during the recording session. Hand order and task conditions were counterbalanced across subjects. At the beginning of each session, participants were reminded which response hand to use. Sequences with and without faces alternated in runs of two so that each subject performed the same types of sequences with both hands.

EEG recording and analysis

The electroencephalogram (EEG) was continuously recorded with the EEvoke software package (ANT Software, Enschede, The Netherlands) from 128 scalp sites at a sampling rate of 512 Hz, using tin electrodes mounted in an elastic cap (Electro-Cap) and arranged according to the international 10–5 system (). Horizontal and vertical eye movements were also recorded. The average of the mastoids served as the reference. The EEG and electro-oculogram (EOG) were amplified with a half-amplitude band-pass of 0.016–100 Hz. Electrode impedance was kept below 5 kΩ. Before averaging, a computerized artifact rejection criterion was applied to discard epochs containing eye movements, blinks, excessive muscle potentials or amplifier blocking: epochs were rejected when the peak-to-peak amplitude exceeded 50 μV, and the rejection rate was ∼5%. EEG epochs were time-locked to the onset of the body-language pictures, so the ERPs reflect only the static gestures, not their verbal descriptions. ERPs were averaged off-line from −100 to 1200 ms after stimulus onset and analyzed with the EEProbe software (ANT Software, Enschede, The Netherlands). After averaging, ERPs were further band-pass filtered (0.016–40 Hz). ERP components were identified and measured relative to the average baseline voltage over the −100 to 0 ms interval, at the sites and latencies at which they reached their maximum amplitude. EEG epochs associated with an incorrect behavioral response were excluded.
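
The epoching pipeline described above (epochs from −100 to 1200 ms, baseline correction over −100 to 0 ms, peak-to-peak rejection above 50 μV, exclusion of incorrect trials, averaging) can be sketched in NumPy. This is an illustrative reimplementation, not the EEProbe procedure: it assumes that rejection is tested after baseline correction and that a single channel over threshold rejects the whole epoch, and it omits the post-averaging 0.016–40 Hz filter.

```python
import numpy as np

FS_HZ = 512               # sampling rate reported above
EPOCH_MS = (-100, 1200)   # epoch window relative to picture onset
REJECT_PTP_UV = 50.0      # peak-to-peak rejection threshold


def epoch_and_average(eeg_uv, onsets, correct):
    """Epoch around picture onsets, baseline-correct, reject artifacts, average.

    eeg_uv  : continuous EEG, shape (n_channels, n_samples), microvolts
    onsets  : picture-onset sample indices, shape (n_trials,)
    correct : boolean mask, True where the behavioral response was correct
    Returns (erp, n_kept); erp has shape (n_channels, n_times).
    """
    start = round(EPOCH_MS[0] * FS_HZ / 1000)   # -51 samples
    stop = round(EPOCH_MS[1] * FS_HZ / 1000)    # 614 samples
    n_base = -start                             # samples in the -100..0 ms baseline
    kept = []
    for onset, ok in zip(onsets, correct):
        if not ok:                              # incorrect-response trials are excluded
            continue
        epoch = eeg_uv[:, onset + start:onset + stop]
        epoch = epoch - epoch[:, :n_base].mean(axis=1, keepdims=True)
        ptp = epoch.max(axis=1) - epoch.min(axis=1)
        if ptp.max() > REJECT_PTP_UV:           # reject if any channel exceeds 50 uV
            continue
        kept.append(epoch)
    if not kept:
        raise ValueError("all epochs were rejected")
    return np.mean(kept, axis=0), len(kept)
```

The returned count makes it easy to check a rejection rate (the study reports ∼5%) by comparing it with the number of correct trials.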

The peak latency and amplitude of the N170 response were measured at occipito/temporal sites (P7, P8, PO7, PO8, PPO9h, PPO10h) between 140 and 200 ms. The mean area amplitude of the posterior N400 was measured at centro/parietal sites (P1, P2, CP1 and CP2) between 450 and 550 ms. The mean area amplitude of the anterior N400 was measured at frontal sites (F1, F2, FC1 and FC2) between 450 and 550 ms. Lastly, the amplitude of the LP was measured over fronto/central midline sites (FCz, Fz and Cz) between 600 and 800 ms.
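
The component measures above reduce to two operations on an averaged waveform: the most negative point within a latency window (N170 peak latency and amplitude) and the mean voltage within a window (N400 and LP). The sketch below assumes that ‘mean area amplitude’ means the mean voltage in the window, that epochs start at −100 ms and are sampled at 512 Hz, and it also shows the standard-minus-faceless difference wave used for source analysis. Function names are illustrative; channel selection is left to the caller.

```python
import numpy as np

FS_HZ = 512
EPOCH_START_MS = -100.0

# Latency windows (ms) listed above.
WINDOWS_MS = {
    "N170": (140.0, 200.0),
    "posterior_N400": (450.0, 550.0),
    "anterior_N400": (450.0, 550.0),
    "LP": (600.0, 800.0),
}


def sample_times_ms(n_times):
    """Latency (ms) of each sample in an epoch that starts at EPOCH_START_MS."""
    return EPOCH_START_MS + np.arange(n_times) * 1000.0 / FS_HZ


def mean_amplitude(erp, window):
    """Mean voltage inside the window, per channel (erp: n_channels x n_times)."""
    t = sample_times_ms(erp.shape[1])
    mask = (t >= window[0]) & (t <= window[1])
    return erp[:, mask].mean(axis=1)


def negative_peak(erp, window):
    """Latency (ms) and amplitude of the most negative sample in the window, per channel."""
    t = sample_times_ms(erp.shape[1])
    mask = (t >= window[0]) & (t <= window[1])
    segment = erp[:, mask]
    idx = segment.argmin(axis=1)
    return t[mask][idx], segment[np.arange(segment.shape[0]), idx]


def difference_wave(erp_standard, erp_faceless):
    """Standard minus faceless, the subtraction used for the source analysis."""
    return erp_standard - erp_faceless
```

At 512 Hz the sample spacing is about 1.95 ms, which bounds the precision of any peak-latency estimate such as the 18 ms sex difference in N170 latency.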

ERP data were subjected to a five-way multifactorial repeated-measures ANOVA with one between-subject factor (sex: male, female) and four within-subject factors: face presence (standard, faceless), condition (congruent, incongruent), electrode (dependent on the ERP component of interest) and hemisphere (left, right). Multiple comparisons of means were performed with Tukey’s post hoc test.

Topographical voltage maps of the ERPs were made by plotting color-coded isopotentials obtained by interpolating voltage values between surface electrodes at specific latencies. Standardized low-resolution electromagnetic tomography (sLORETA; ) was performed on the difference wave obtained by subtracting the ERPs to faceless pictures from the ERPs to standard pictures at the N170 level. LORETA is a discrete linear solution to the inverse EEG problem; it corresponds to the 3D distribution of neural electric activity that maximizes similarity (i.e. synchronization) in orientation and strength between neighboring neuronal populations (represented by adjacent voxels). In this study, an improved version of standardized weighted low-resolution brain electromagnetic tomography was used, which incorporates a singular value decomposition-based lead field weighting method (swLORETA; ). The source space properties included a grid spacing (the distance between two calculation points) of 5 points and an estimated signal-to-noise ratio (SNR) of 3, which defines the regularization (higher values indicate less regularization and therefore less blurred results). Using an SNR value of three to four in the regularization produces superior accuracy of the solutions for all inverse problems assessed. swLORETA was performed on the group (grand-averaged) data to identify statistically significant electromagnetic dipoles (P < 0.05), with larger magnitudes corresponding to more significant activation. The data were automatically re-referenced to the average reference as part of the LORETA analysis. A realistic boundary element model (BEM) was derived from a T1-weighted 3D MRI dataset through segmentation of the brain tissue; it consisted of one homogeneous compartment comprising 3446 vertices and 6888 triangles.
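
The way an SNR setting controls regularization can be illustrated with a generic Tikhonov-regularized minimum-norm inverse. This is not swLORETA, which adds standardization and SVD-based lead-field weighting inside the ASA software; it is a simplified sketch assuming one common convention, λ = trace(LLᵀ)/(n_sensors·SNR²), and a random toy lead field L rather than the BEM used in the study.

```python
import numpy as np


def min_norm_inverse(leadfield, snr):
    """Tikhonov-regularized minimum-norm inverse operator (n_sources x n_sensors).

    Assumed convention: lambda = trace(L L^T) / n_sensors / snr**2, so a larger
    SNR gives a smaller lambda, i.e. weaker regularization and less blurring.
    """
    n_sensors = leadfield.shape[0]
    gram = leadfield @ leadfield.T
    lam = np.trace(gram) / n_sensors / snr**2
    # L^T (L L^T + lambda I)^-1, computed with a linear solve instead of an explicit inverse
    return np.linalg.solve(gram + lam * np.eye(n_sensors), leadfield).T


def relative_residual(leadfield, y, snr):
    """Fraction of the scalp data left unexplained by the regularized estimate."""
    x_hat = min_norm_inverse(leadfield, snr) @ y
    return np.linalg.norm(y - leadfield @ x_hat) / np.linalg.norm(y)


rng = np.random.default_rng(0)
L = rng.standard_normal((32, 500))   # hypothetical toy lead field: 32 sensors, 500 sources
x_true = np.zeros(500)
x_true[123] = 1.0                    # a single active source
y = L @ x_true                       # noise-free scalp potentials

for snr in (1.0, 3.0, 10.0):
    print(f"SNR = {snr:4.1f}: relative residual = {relative_residual(L, y, snr):.3f}")
```

As the SNR increases, λ shrinks and the estimate fits the data more closely; with real, noisy recordings that closer fit also means fitting more noise, which is the trade-off behind choosing a moderate value such as 3.
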
Advanced Source Analysis (ASA) employs a realistic three-layer head model (scalp, skull and brain) created using the BEM. This realistic head model comprises a set of irregularly shaped boundaries and the conductivity values of the compartments between them. Each boundary is approximated by a number of points interconnected by plane triangles. The triangulation yields a more or less evenly distributed mesh of triangles whose density depends on the chosen grid value: a smaller grid spacing results in finer meshes, and vice versa. With this three-layer realistic head model, the segmentation is assumed to include the current generators of the brain volume, including both gray and white matter. Scalp, skull and brain conductivities were assumed to be 0.33, 0.0042 and 0.33 S/m, respectively. The source reconstruction solutions were projected onto the 3D MRI of the Collins brain provided by the Montreal Neurological Institute. The probabilities of source activation based on Fisher’s F-test were provided for each independent EEG source, with values expressed on a so-called ‘unit’ scale (the larger, the more significant). Both the segmentation and the generation of the head model were performed with the ASA software (Advanced Neuro Technology, ANT, Enschede, the Netherlands).
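
For a closed triangulated surface with the topology of a sphere, Euler’s formula V − E + F = 2 combined with E = 3F/2 (each edge shared by exactly two triangles) gives F = 2V − 4. The vertex and triangle counts reported for the BEM compartment (3446 and 6888) satisfy this relation, which offers a quick sanity check on such meshes. The helpers below are hypothetical illustrations, not part of the ASA software.

```python
def closed_mesh_triangles(n_vertices: int) -> int:
    """Triangle count of a closed, genus-0 triangulated surface (F = 2V - 4)."""
    return 2 * n_vertices - 4


def is_closed_sphere_mesh(n_vertices: int, n_triangles: int) -> bool:
    """Check Euler's formula V - E + F = 2 with E = 3F/2 for a closed triangle mesh."""
    if (3 * n_triangles) % 2:          # every edge must be shared by exactly two triangles
        return False
    n_edges = 3 * n_triangles // 2
    return n_vertices - n_edges + n_triangles == 2


print(closed_mesh_triangles(3446), is_closed_sphere_mesh(3446, 6888))
```

A mismatch would point to holes or non-manifold edges in the segmented surface, which would compromise the boundary element computation.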

Reaction times (RTs) falling outside the mean ± 2 s.d. were discarded, resulting in a rejection rate of 2%. Because percentages follow a binomial distribution and therefore lack the homoscedasticity required by ANOVA (e.g. ), error and omission percentages were arcsine-transformed before analysis; the transformation stabilizes the variance and brings the distribution closer to normal. RTs and error percentages were subjected to separate multifactorial repeated-measures ANOVAs with one between-subject factor (sex: male, female) and three within-subject factors: face presence (standard, faceless), condition (congruent, incongruent) and response hand (left, right).
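
The two preprocessing steps above can be sketched as follows. The text does not state over which grouping the ±2 s.d. criterion was computed, so the sketch applies it to whatever array it receives (e.g. one participant in one condition); it also assumes the common arcsine-square-root form of the transformation, whereas the text says only ‘arcsine’. Function names are illustrative.

```python
import numpy as np


def trim_rts(rts_ms, n_sd=2.0):
    """Keep RTs within mean +/- n_sd sample standard deviations (single pass).

    Assumption: applied to one array (e.g. one participant and condition);
    the grouping is not stated in the text.
    """
    rts_ms = np.asarray(rts_ms, dtype=float)
    m, sd = rts_ms.mean(), rts_ms.std(ddof=1)
    return rts_ms[np.abs(rts_ms - m) <= n_sd * sd]


def arcsine_transform(percent):
    """Arcsine-square-root transform of percentages (0-100), returned in radians."""
    p = np.clip(np.asarray(percent, dtype=float) / 100.0, 0.0, 1.0)
    return np.arcsin(np.sqrt(p))


rts = np.array([750, 760, 770, 780, 790, 800, 810, 820, 830, 2000], dtype=float)
print(trim_rts(rts))                          # the 2000 ms outlier is removed
print(arcsine_transform([0.0, 50.0, 100.0]))  # 0, pi/4, pi/2
```

The transform stretches values near 0% and 100%, where binomial variance is smallest, which is why it suits low error and omission rates such as those reported here.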

The Greenhouse–Geisser correction was applied to compensate for possible violations of the sphericity assumption for factors with more than two levels. The epsilon (ε) values and the corrected probability levels (when ε < 1) are reported. The normality of the data distribution was assessed with the Shapiro–Wilk test (P > 0.95). See the Supplementary Material for a detailed report of all statistical data.
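
For one within-subject factor with k levels, the Greenhouse–Geisser ε is computed from the covariance matrix M of k − 1 orthonormal contrasts as ε = tr(M)² / ((k − 1)·tr(M²)). It equals 1 under sphericity and falls toward 1/(k − 1) as sphericity is violated; the corrected test multiplies both degrees of freedom by ε. The sketch below is a minimal NumPy version with illustrative names, not the routine of any particular statistics package. It also shows why the correction matters only for factors with more than two levels: with k = 2, M is a single number and ε is always 1.

```python
import numpy as np


def orthonormal_contrasts(k):
    """(k-1) x k matrix of orthonormal contrasts, each orthogonal to the grand mean."""
    basis = np.eye(k)
    basis[:, 0] = 1.0                  # first column spans the constant (mean) direction
    q, _ = np.linalg.qr(basis)
    return q[:, 1:].T                  # drop the mean direction


def gg_epsilon_from_cov(cov):
    """Greenhouse-Geisser epsilon from a k x k covariance matrix of the levels."""
    k = cov.shape[0]
    c = orthonormal_contrasts(k)
    m = c @ cov @ c.T                  # covariance of the orthonormal contrasts
    return float(np.trace(m) ** 2 / ((k - 1) * np.trace(m @ m)))


def greenhouse_geisser_epsilon(data):
    """Epsilon for data of shape (n_subjects, k_levels)."""
    return gg_epsilon_from_cov(np.cov(data, rowvar=False))


# Compound symmetry (equal variances, equal covariances) satisfies sphericity.
print(gg_epsilon_from_cov(2.0 * np.eye(3) + 0.5))
```

With three electrode levels, as for the LP (FCz, Fz, Cz), ε can range from 0.5 to 1.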

Results

Behavioral results

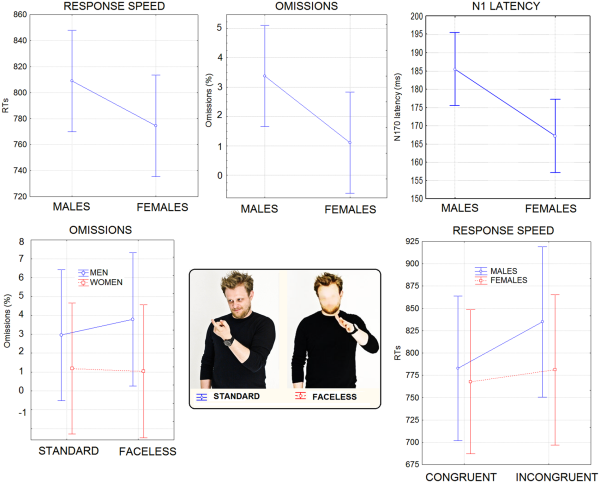

Response speed. The ANOVA yielded a significant effect of condition (P < 0.001), with faster RTs to congruent (775 ms) than to incongruent stimuli (808 ms). The sex × congruence interaction (P = 0.05) and related Tukey post hoc comparisons showed slower RTs in males (783 ms) than in females (767 ms) to congruent stimuli, and a cost for incongruent (835 ms) vs congruent stimuli (783 ms) only in men (see Figure 2, lower row).

Fig. 2

(Top row) Mean RTs (in ms), omission percentages and N1 latency (in ms) recorded for the two sexes regardless of stimulus type. (Lower row) Mean percentage of omissions as a function of face presence in the two sexes; note the absence of a cost for faceless stimuli in female participants. On the right, response times (in ms) recorded in male and female participants as a function of stimulus congruence; again, no cost for the incongruent condition was found in female participants.

Errors. Overall, the raw error percentage was ∼14.66%. Errors consisted of incorrect categorizations. The ANOVA showed a significant effect of face presence (P < 0.0001), indicating lower accuracy for faceless than for standard pictures. The condition factor was also significant (P < 0.0001), with more errors for congruent than incongruent stimuli. Furthermore, the condition × face presence interaction (P < 0.0001) and related post hoc comparisons indicated lower accuracy for faceless (20.1% errors) than for standard pictures (15.4% errors) in the congruent condition only.

Omission rate. The raw omission rate was 2.24%. The effect of sex approached significance (P = 0.06), with men making more omissions (3.37%) than women (1.1%). The sex × face presence interaction (P < 0.035) showed that men made more omissions for faceless pictures than for pictures including faces, whereas no cost was associated with face blurring in female participants (see Figure 2). The behavioral and electrophysiological results are summarized in Table 1 and in the Supplementary Material.

Electrophysiological results

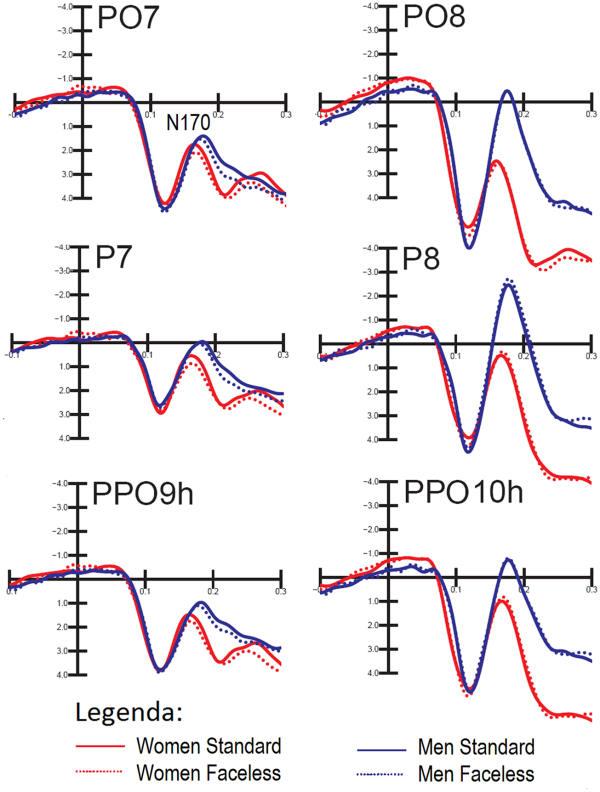

Figure 3 shows the grand average waveforms recorded from the left and right occipito/temporal, posterior temporal and lateral occipital sites in response to standard and faceless stimuli (regardless of congruence) in female and male participants.

Fig. 3

Grand-average ERPs recorded at occipito/temporal, posterior temporal and lateral occipital sites in response to standard and faceless stimuli (regardless of congruence) in female and male participants.

N170 latency. The ANOVA performed on N170 latency showed significant results for the sex factor (P < 0.02) with a longer N170 latency observed in males (185 ms) than in females (167 ms), as shown in Figure 2 (upper right).

N170 amplitude. Overall, the N170 amplitude was greater at posterior temporal sites (P < 0.0001). It was also generally greater over the right than the left hemisphere, as shown by the hemisphere factor (P < 0.001). The sex × electrode × hemisphere interaction approached significance (P = 0.062); post hoc comparisons (P < 0.001) showed that, whereas the N170 was larger over the right than the left hemisphere in men, it was bilateral over occipito/temporal sites in women.

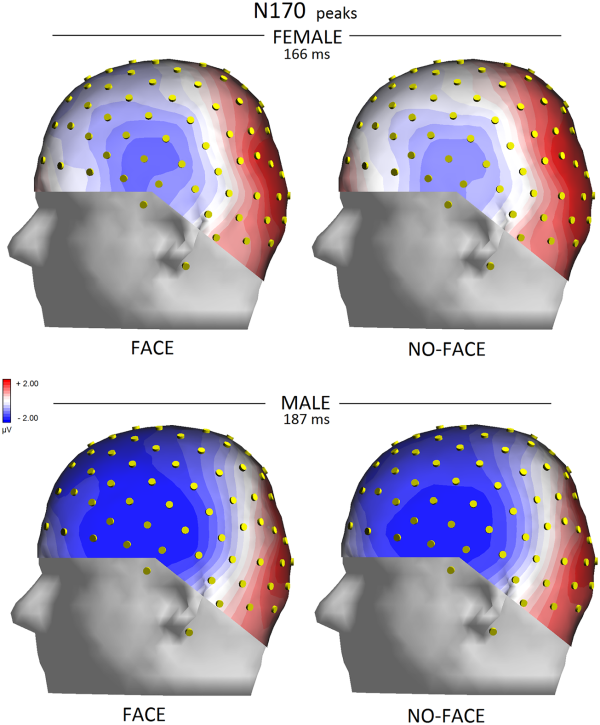

The significant condition × electrode interaction (P < 0.05) indicated a greater N170 to congruent than to incongruent pictures at all sites except occipito/temporal sites. The face presence × electrode × hemisphere interaction (P < 0.04) and related post hoc comparisons (P < 0.001) showed that the N170 was smaller for faceless than for standard pictures, especially over left occipito/temporal sites. This effect is shown in the topographical maps in Figure 4.

Fig. 4

Left view of isocolour topographical maps obtained by plotting surface potentials recorded from various sites at peak of N170 on a 3D spherical head.

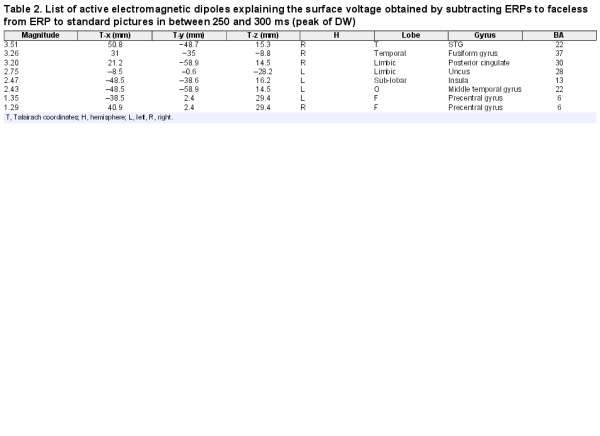

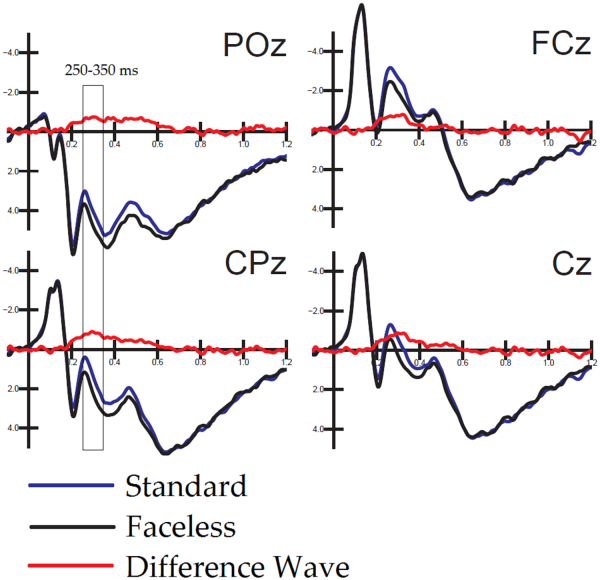

To identify the neural generators of this face-related activity in standard, as opposed to faceless, pictures, a swLORETA solution was computed on the difference wave obtained by subtracting the ERPs to faceless pictures from the ERPs to standard pictures (displayed in Figure 5), in the latency range of the maximum peak of differential activity (250–300 ms). Table 2 lists the active sources as a function of magnitude (in nAm). The most important differences between the two conditions were the activation of the right superior temporal gyrus (STG) and the right FFA, as well as of limbic regions (such as the cingulate cortex and insula), as evident from the LORETA images depicted in Figure 6.

Fig. 5

Difference waves (standard minus faceless) overlaid on the ERPs elicited by standard and faceless pictures, recorded at midline occipitoparietal, centroparietal, central and frontocentral sites.

Fig. 6

Sagittal (right), axial and coronal brain sections showing the location and strength of the electromagnetic dipoles explaining the surface difference voltage obtained by subtracting ERPs to faceless pictures from ERPs to standard pictures in the 250–300 ms latency range, corresponding to the peak of the difference wave (DW). L, left; R, right; A, anterior; P, posterior; fSTG, face superior temporal gyrus; FG, fusiform gyrus (including the FFA).

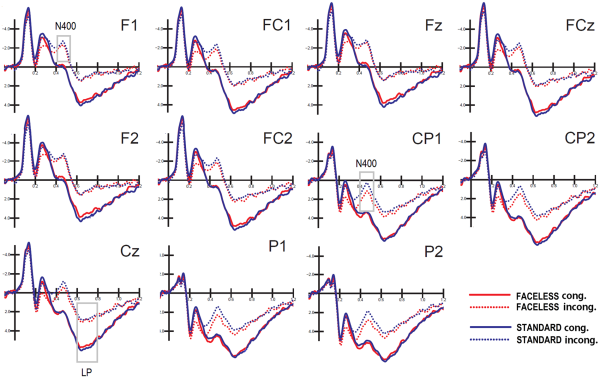

Figure 7 shows the grand average waveforms recorded from left and right frontal, fronto/central and parietal sites, and midline frontal, fronto/central and central sites in response to standard and faceless stimuli (as a function of their congruence). The figure shows a large N400 deflection in response to incongruent pictures, as opposed to a LP for congruent pictures.

Fig. 7

Grand-average ERPs recorded from left and right frontal, frontocentral and parietal sites, and midline frontal, frontocentral and central sites in response to standard and faceless stimuli, when congruent or incongruent with the verbal description.

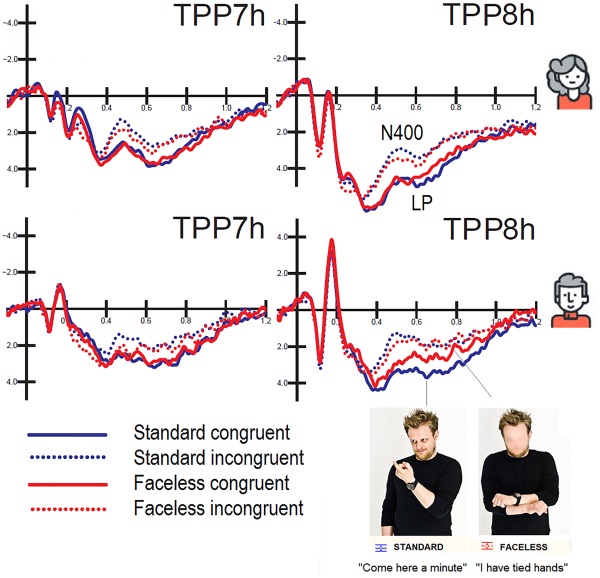

Posterior N400 (450–550 ms). The ANOVA performed on N400 mean area yielded a significant effect of face presence (P < 0.03), with smaller responses to faceless (3.82 µV) than to standard pictures (3.35 µV); because the N400 is a negative-going deflection, more positive values indicate a smaller N400. The ANOVA also yielded a significant effect of condition (P < 0.001), with a larger N400 to incongruent (2.22 µV) than to congruent (4.95 µV) stimuli. The N400 was generally greater over the left (3.36 µV) than the right (3.81 µV) hemisphere, as shown by the significant hemisphere factor (P < 0.002). The further face × condition interaction (P < 0.02) and related post hoc comparisons (P < 0.001) showed that, for incongruent pictures, the N400 was smaller to faceless (2.61 µV) than to standard pictures (1.83 µV) (see Figure 8).

Fig. 8

Grand-average ERPs recorded at left and right temporo/parietal sites in women (top) and men (bottom) as a function of picture congruence and face presence. Note the cost for faceless vs standard congruent pictures, present only in men, in the form of a reduced LP amplitude.

Anterior N400 (450–550 ms). The ANOVA performed on anterior N400 area yielded a significant effect of condition (P < 0.001), with greater N400 amplitudes to incongruent (−1.08 µV) than to congruent stimuli (1.53 µV). The significant face presence × condition interaction (P < 0.04) and related post hoc comparisons (P < 0.001) showed that this effect was more pronounced for standard stimuli, as displayed in Figure 7. The further face × electrode interaction (P < 0.004) showed that the N400 was larger to standard than to faceless stimuli at FC1 and FC2. The condition × hemisphere interaction (P < 0.002) showed larger N400s over the left than the right hemisphere for congruent stimuli.

LP (600–800 ms). Overall, the LP was greater at central than at frontal midline sites, as shown by the electrode factor (P < 0.001). The ANOVA performed on LP amplitude yielded a significant effect of congruence (P < 0.001), with larger amplitudes to congruent (5.24 µV) than to incongruent stimuli (2.24 µV). The face presence × condition interaction (P < 0.04) and related post hoc comparisons (P < 0.001) indicated that the LP was larger to congruent than to incongruent stimuli, especially when the face was visible. The sex × electrode interaction (P < 0.02) showed that the LP was larger overall in the female (3.67 µV) than in the male brain, especially at the Fz site (1.97 µV). The further sex × electrode × condition interaction (P < 0.003) and related post hoc comparisons (P < 0.001) indicated that the LP to congruent stimuli was much larger in the female brain, regardless of face presence (see Figure 8; at Fz, females: 5.232 µV; males: 3.24 µV).

Discussion

The purpose of this study was to investigate the role played by facial expressions in the comprehension of mimicry and gestures. Of specific interest was the existence of possible gender differences in the ability to comprehend body language, especially with regard to the presence or absence of facial cues.

Behavioral data showed several effects of viewer sex on body-language processing. Women responded faster than men to congruent stimuli, and a cost for incongruent vs congruent stimuli was found only in men. Furthermore, men made more omissions for faceless pictures than for pictures including faces, whereas face blurring had no cost in female participants. Therefore, pantomime comprehension was faster and more accurate in women than in men when the information was incongruent or lacked facial details.

These findings might be interpreted in light of the current literature suggesting a greater ability to decode body language in women than in men (e.g. ,; ). Indeed, women show an advantage in decoding emotional non-verbal cues both in the auditory (; ) and in the visual modality ().

In line with previous literature (e.g. Wu and Coulson, 2005, 2007; , ), as well as with our previous study on EBL (), gestures congruent with their caption elicited faster responses (RTs) and were recognized more accurately (accuracy data) than those associated with an incongruent caption. This suggests that incongruent stimuli require additional processing time and computational resources compared with congruent ones. Furthermore, accuracy analysis showed a significant effect of face presence: participants committed fewer errors when faces were visible than when they were obscured. This effect could be due to the lack of the affective information conveyed by facial mimicry, which would make bodily gestures more ambiguous and harder to understand. Interestingly, face blurring reduced the percentage of hits only in men, not in women.

Electrophysiological data showed an early effect of face presence and stimulus congruence on the amplitude of the N170 response. The N170, typically peaking at 140–200 ms over the right occipito-temporal scalp, is involved in face configuration processing (; ; ), face identity coding (; ) and facial expression coding (; ). In our study, N170 latency was 18 ms later in men than in women. This result is consistent with other evidence in the ERP literature on face processing () and suggests a possible specialization for faces as sensory signals in the female brain (; ; ,; ). The analysis of N170 amplitude showed a general hemispheric asymmetry in scalp distribution, as predicted by the literature (). Interestingly, a sex difference in hemispheric asymmetry indicated a bilateral N170 at PO7–PO8 sites in women, as opposed to a right-lateralized N170 in men. These results support the hypothesis advanced by regarding sexual dimorphism in the functional organization of face processing in terms of hemispheric asymmetry (; 2010,a,b; ), hemispheric specialization (; ,), callosal transmission speed, measured as interhemispheric transfer time (IHTT), and its directional asymmetry (,; ), processing efficiency (; ) and perceptual preference (; ,; ; ; ). For example, in an ERP study (,) in which IHTT was measured by comparing N170 latency over the hemisphere contralateral vs ipsilateral to the visual field in which faces were presented, IHTT was longer and asymmetric in men (with a delay in the RVF/LH → LVF/RH direction) and shorter and symmetrical in women.
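The IHTT logic summarized above can be made concrete. A common approach, assumed here rather than taken from the cited study, estimates IHTT as the N170 latency over the hemisphere ipsilateral to the stimulated visual field minus the latency over the contralateral hemisphere, separately for each transfer direction. The Python sketch below uses invented latencies and hypothetical names (`N170Latencies`, `ihtt`); PO7 and PO8 stand for the left and right recording sites.

```python
from dataclasses import dataclass


@dataclass
class N170Latencies:
    """Hypothetical N170 peak latencies in ms (PO7 = left, PO8 = right site)."""
    lvf_po7: float  # left visual field stimulus, left hemisphere (ipsilateral)
    lvf_po8: float  # left visual field stimulus, right hemisphere (contralateral)
    rvf_po7: float  # right visual field stimulus, left hemisphere (contralateral)
    rvf_po8: float  # right visual field stimulus, right hemisphere (ipsilateral)


def ihtt(lat: N170Latencies) -> dict:
    """IHTT per direction = ipsilateral latency - contralateral latency."""
    rh_to_lh = lat.lvf_po7 - lat.lvf_po8  # LVF/RH -> LH transfer
    lh_to_rh = lat.rvf_po8 - lat.rvf_po7  # RVF/LH -> RH transfer
    return {
        "RH->LH": rh_to_lh,
        "LH->RH": lh_to_rh,
        # Positive values mean LH->RH transfer is slower than RH->LH
        "asymmetry": lh_to_rh - rh_to_lh,
    }


# Invented latencies, for illustration only
example = N170Latencies(lvf_po7=182.0, lvf_po8=170.0, rvf_po7=172.0, rvf_po8=196.0)
print(ihtt(example))
# {'RH->LH': 12.0, 'LH->RH': 24.0, 'asymmetry': 12.0}
```

In this invented example the LH→RH direction is slower, which is the kind of directional asymmetry the text describes; the numbers themselves carry no empirical weight.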

In this study, the N170 showed greater amplitude in response to congruent than incongruent pictures. This evidence suggests early semantic-conceptual processing of perceptual congruence between 140 and 200 ms. Consistent with this observation, provided evidence of an early P115 effect of face–body emotional congruence in a paradigm in which pictures of fearful and angry faces and bodies were combined into face–body compound images with either matched or mismatched emotional expressions. However, the same study found no such effect at the N170 level.

In our study, N170 was modulated by the presence or absence of facial information, but the effect was significant only over the left hemisphere. Indeed, the time course of the difference wave, obtained by subtracting the ERPs to faceless pictures from the ERPs to standard pictures, showed that the maximal difference between conditions occurred at ∼250–300 ms post-stimulus and was much smaller at the N170 peak.
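The difference-wave analysis described above can be sketched as follows: subtract the faceless-picture ERP from the standard-picture ERP point by point, then locate the latency of the largest absolute difference within a search window. The data here are synthetic (a Gaussian bump placed at 275 ms purely for illustration), and the function name `difference_wave_peak` and the 100–600 ms search window are assumptions rather than the authors' procedure.

```python
import numpy as np


def difference_wave_peak(standard, faceless, times_ms, start_ms, end_ms):
    """Return (standard - faceless) and the latency of its largest
    absolute value within [start_ms, end_ms]."""
    standard = np.asarray(standard, dtype=float)
    faceless = np.asarray(faceless, dtype=float)
    times_ms = np.asarray(times_ms, dtype=float)
    diff = standard - faceless  # point-by-point difference wave
    idx = np.flatnonzero((times_ms >= start_ms) & (times_ms <= end_ms))
    if idx.size == 0:
        raise ValueError("search window contains no samples")
    peak = idx[np.argmax(np.abs(diff[idx]))]  # polarity-independent peak
    return diff, float(times_ms[peak])


# Synthetic data: 1 sample per ms; the two conditions differ only by a
# Gaussian bump centred at 275 ms (an arbitrary choice for illustration)
times = np.arange(-100, 1001)
faceless = np.zeros(times.shape)
standard = 2.0 * np.exp(-(((times - 275) / 30.0) ** 2))
diff, latency = difference_wave_peak(standard, faceless, times, 100, 600)
print(latency)  # 275.0
```

Taking the absolute value lets the search find the largest condition difference regardless of its polarity, which matters when the effect may be either a positive or a negative deflection.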

The LORETA source reconstruction performed on this differential activity showed that the lack of facial information mostly affected the activation of the right STG (BA22) and the right fusiform gyrus (BA37). The former is mostly involved in the affective processing of body language () and biological motion, but it is also involved in face processing (fSTS; ). The latter (BA37) has been characterized as the FFA (), and it is also involved in body processing (fusiform body area; ; ). Both areas have been reported to show insufficient or atypical activation in individuals with ASD during the processing of social information (; ; ; ; ). This suggests that our stimulus battery could be a useful tool for assessing or diagnosing a deficit in social cognition due to face avoidance or abnormal face–eye fixation.

Source reconstruction data also showed that face blurring reduced the activation of limbic regions (posterior cingulate cortex, uncus and insula) relevant to the processing of visual social information (; ; ). The same circuits have been described as atypically and insufficiently activated in adolescents with ASD during facial affect processing (Leung et al., 2015). Abnormal functional connectivity involving these areas has also been reported in autistic individuals (e.g. ). The reduced premotor activation (BA6) suggests that the lack of facial cues impaired the activity of the ‘Action Observation System’ (; ) engaged in understanding body language (e.g. ; ; ; ; ,). In a similar study, showed that incongruent affective behavior elicited a large anterior N400 deflection whose neural generators included the uncus and cingulate cortex, social visual areas (STS, FFA and extrastriate body area [EBA]) and premotor cortex (BA6), which is compatible with neuroimaging data on body-language understanding (e.g. ; ; ; ; ; ).

Later ERP components showed strong effects of face presence and stimulus congruence. Both posterior and anterior N400 (450–550 ms) were much larger when the gesture did not fit its caption, in line with previous ERP evidence (, ). They were also larger for standard than faceless stimuli, as if face presence made incongruity more evident. The late positivity (LP, 600–800 ms) showed the opposite pattern: it was larger in response to congruent stimuli, possibly because it reflects cognitive updating and task-related decisional processes () as well as the link between perceptual processing and response preparation (). Interestingly, LP showed several sex differences: at midline frontal sites it had greater amplitude in women than in men, especially for congruent stimuli, which is possibly associated with the faster RTs of women. Furthermore, in females the LP was not reduced in amplitude for faceless stimuli. These data fit well with the behavioral results, which showed no cost in performance (hit rate) for female observers making decisions about faceless pictures.

Conclusions

Overall, the results suggest that females might be more resistant to the lack of facial information, or better at understanding body language when facial information is missing. Brain potentials showed slower perceptual processing of gestures in males than in females, as indexed by the N170 response, and larger LP amplitudes, reflecting gesture comprehension, in the female brain, along with a cost for faceless stimuli only in males. Face obscuration did not affect accuracy in women, as reflected by omission percentages, nor did it reduce their LP amplitude, suggesting better comprehension of face-deprived pantomimes. It is interesting to compare these findings with data indicating that individuals with autism spectrum disorder (ASD), roughly four in five of whom are males (), tend to avoid face or gaze processing during the perception of social scenes (; ), which may also explain their deficits in social processing.

Therefore, one may speculatively hypothesize that individuals showing face/gaze avoidant behavior (such as individuals with ASD) would show no advantage for standard over faceless stimuli at either the behavioral or the electrophysiological level, although this hypothesis clearly deserves direct experimental investigation.

One limitation of this study is that no empathy questionnaire was administered to our male and female participants. We therefore do not know whether the more empathic individuals were also better at understanding the meaning of gestures, or more resistant to a lack of facial information.

Supplementary data

Supplementary data are available at SCAN online.

Funding

Funded by grant 2013-ATE-0037 9928 ‘How face avoidance affects action understanding and affective processing in Autism’ from the University of Milano-Bicocca.

Conflict of interest. None declared.

References

- Adolphs R., Tranel D., Damasio A.R. (2003). Dissociable neural systems for recognizing emotions. Brain and Cognition, 52(1), 61–9.

- Andrew J., Cooke M., Muncer S.J. (2008). The relationship between empathy and Machiavellianism: an alternative to empathizing-systemizing theory. Personality and Individual Differences, 44(5), 1203–11.

- Baron-Cohen S. (2009). Autism: the empathizing-systemizing (E-S) theory. Annals of the New York Academy of Sciences, 1156, 68–80.

- Beaucousin V., Zago L., Hervé P.Y., Strelnikov K., Crivello F. (2011). Sex-dependent modulation of activity in the neural networks engaged during emotional speech comprehension. Brain Research, 1390, 108–17.

- Belin P., Fillion-Bilodeau S., Gosselin F. (2008). The Montreal Affective Voices: a validated set of nonverbal affect bursts for research on auditory affective processing. Behavior Research Methods, 40(2), 531–9.

- Bentin S., Allison T., Puce A., Perez E., McCarthy G. (1996). Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience, 8(6), 551–65.

- Bernardis P., Salillas E., Caramelli N. (2008). Behavioural and neurophysiological evidence of semantic interaction between iconic gestures and words. Cognitive Neuropsychology, 25(7–8), 1114–28.

- Burdwood E.N., Simons R.F. (2016). Pay attention to me! Late ERPs reveal gender differences in attention allocated to romantic partners. Psychophysiology, 53(4), 436–43.

- Caharel S., d’Arripe O., Ramon M., Jacques C., Rossion B. (2009). Early adaptation to repeated unfamiliar faces across viewpoint changes in the right hemisphere: evidence from the N170 ERP component. Neuropsychologia, 47(3), 639–43.

- Canessa N., Alemanno F., Riva F., et al (2012). The neural bases of social intention understanding: the role of interaction goals. PLoS One, 7(7), e42347.

- Cárdenas R.A., Harris L.J., Becker M.W. (2013). Sex differences in visual attention toward infant faces. Evolution and Human Behavior, 34(4), 280–7.

- Carter E.J., Hodgins J.K., Rakison D.H. (2011). Exploring the neural correlates of goal-directed action and intention understanding. Neuroimage, 54(2), 1634–42.

- Collignon O., Girard S., Gosselin F., Saint-Amour D., Lepore F., Lassonde M. (2010). Women process multisensory emotion expressions more efficiently than men. Neuropsychologia, 48(1), 220–5.

- Dalton K.M., Nacewicz B.M., Johnstone T., et al (2005). Gaze fixation and the neural circuitry of face processing in autism. Nature Neuroscience, 8(4), 519–26.

- Dapretto M., Davies M.S., Pfeifer J.H., et al (2006). Understanding emotions in others: mirror neuron dysfunction in children with autism spectrum disorders. Nature Neuroscience, 9(1), 28–30.

- De Gelder B., Hadjikhani N. (2006). Non-conscious recognition of emotional body language. Neuroreport, 17(6), 583–6.

- De Gelder B., Snyder J., Greve D., Gerard G., Hadjikhani N. (2004). Fear fosters flight: a mechanism for fear contagion when perceiving emotion expressed by a whole body. Proceedings of the National Academy of Sciences of the United States of America, 101(47), 16701–6.

- De Gelder B., de Borst A.W., Watson R. (2015). The perception of emotion in body expressions. Wiley Interdisciplinary Reviews: Cognitive Sciences, 6(2), 149–58.

- Dien J., Spencer K.M., Donchin E. (2004). Parsing the late positive complex: mental chronometry and the ERP components that inhabit the neighborhood of the P300. Psychophysiology, 41(5), 665–78.

- Downing P.E., Jiang Y., Shuman M., Kanwisher N. (2001). A cortical area selective for visual processing of the human body. Science, 293(5539), 2470–3.

- Eimer M. (2010). The face-sensitive N170 component of the event-related brain potential. In: Rhodes G., Calder A., Johnson M., Haxby J.V., editors. The Oxford Handbook of Face Perception. USA: Oxford University Press.

- Fogassi L., Ferrari P.F., Gesierich B., Rozzi S., Chersi F., Rizzolatti G. (2005). Parietal lobe: from action organization to intention understanding. Science, 308(5722), 662–7.

- Gallese V., Keysers C., Rizzolatti G. (2004). A unifying view of the basis of social cognition. Trends in Cognitive Sciences, 8(9), 396–403.

- Gazzola V., Keysers C. (2009). The observation and execution of actions share motor and somatosensory voxels in all tested subjects: single-subject analyses of unsmoothed fMRI data. Cerebral Cortex, 19(6), 1239–55.

- Glocker M.L., Langleben D.D., Ruparel K., Loughead J.W., Gur R.C., Sachser N. (2009). Baby schema in infant faces induces cuteness perception and motivation for caretaking in adults. Ethology, 115(3), 257–63.

- Godard O., Leleu A., Rebaï M., Fiori N. (2013). Sex differences in interhemispheric communication during face identity encoding: evidence from ERPs. Neuroscience Research, 76(1–2), 58–66.

- Golarai G., Grill-Spector K., Reiss A.L. (2006). Autism and the development of face processing. Clinical Neuroscience Research, 6(3), 145–60.

- Goldberg H., Christensen A., Flash T., Giese M.A., Malach R. (2015). Brain activity correlates with emotional perception induced by dynamic avatars. Neuroimage, 122, 306–17.

- Grafton S.T., Hamilton A.F. (2007). Evidence for a distributed hierarchy of action representation in the brain. Human Movement Science, 26(4), 590–616.

- Grèzes J., Frith C., Passingham R.E. (2004). Brain mechanisms for inferring deceit in the actions of others. Journal of Neuroscience, 24(24), 5500–5.

- Gunter T.C., Bach P. (2004). Communicating hands: ERPs elicited by meaningful symbolic hand postures. Neuroscience Letters, 372(1–2), 52–6.

- Hadjikhani N., Joseph R.M., Snyder J., Tager-Flusberg H. (2007). Abnormal activation of the social brain during face perception in autism. Human Brain Mapping, 28(5), 441–9.

- Hahn A.C., Perrett D.I. (2014). Neural and behavioral responses to attractiveness in adult and infant faces. Neuroscience and Biobehavioral Reviews, 46(4), 591–603.

- Hall J.K., Hutton S.B., Morgan M.J. (2010). Sex differences in scanning faces: does attention to the eyes explain female superiority in facial expression recognition? Cognition & Emotion, 24(4), 629–37.

- Hamilton A.F., Grafton S.T. (2008). Action outcomes are represented in human inferior frontoparietal cortex. Cerebral Cortex, 18(5), 1160–8.

- Holle H., Gunter T.C., Rüschemeyer S.A., Hennenlotter A., Iacoboni M. (2008). Neural correlates of the processing of co-speech gestures. Neuroimage, 39(4), 2010–24.

- Iacoboni M., Lieberman M.D., Knowlton B.J., et al (2004). Watching social interactions produces dorsomedial prefrontal and medial parietal BOLD fMRI signal increases compared to a resting baseline. NeuroImage, 21(3), 1167–73.

- Just M.A., Keller T.A., Malave V.L., Kana R.K., Varma S. (2012). Autism as a neural systems disorder: a theory of frontal-posterior underconnectivity. Neuroscience and Biobehavioral Reviews, 36(4), 1292–313.

- Kana R.K., Travers B.G. (2012). Neural substrates of interpreting actions and emotions from body postures. Social Cognitive and Affective Neuroscience, 7(4), 446–56.

- Kanwisher N., McDermott J., Chun M. (1997). The fusiform face area: a module in human extrastriate cortex specialized for face perception. Journal of Neuroscience, 17(11), 4302–11.

- Kim S.Y., Choi U.S., Park S.Y., et al (2015). Abnormal activation of the social brain network in children with autism spectrum disorder: an FMRI study. Psychiatry Investigation, 12(1), 37–45.

- Kleinhans N.M., Richards T., Sterling L., et al (2008). Abnormal functional connectivity in autism spectrum disorders during face processing. Brain, 131(4), 1000–12.

- Kret M.E., de Gelder B. (2013). When a smile becomes a fist: the perception of facial and bodily expressions of emotion in violent offenders. Experimental Brain Research, 228(4), 399–410.

- Krombholz A., Schaefer F., Boucsein W. (2007). Modification of N170 by different emotional expression of schematic faces. Biological Psychology, 76(3), 156–62.

- Lachnit H., Pieper W. (1990). Speed and accuracy effects of fingers and dexterity in 5-choice reaction tasks. Ergonomics, 33(12), 1443–54.

- Leung R.C., Pang E.W., Cassel D., Brian J.A., Smith M.L., Taylor M.J. (2015). Early neural activation during facial affect processing in adolescents with Autism Spectrum Disorder. Neuroimage: Clinical, 7, 203–12.

- Libero L.E., Stevens C.E. Jr., Kana R.K. (2014). Attribution of emotions to body postures: an independent component analysis study of functional connectivity in autism. Human Brain Mapping, 35(10), 5204–18.

- Maximo J.O., Cadena E.J., Kana R.K. (2014). The implications of brain connectivity in the neuropsychology of autism. Neuropsychology Review, 24(1), 16–31.

- Meeren H.K.M., van Heijnsbergen C.C., de Gelder B. (2005). Rapid perceptual integration of facial expression and emotional body language. Proceedings of the National Academy of Sciences of the United States of America, 102(45), 16518–23.

- Molnar-Szakacs I., Kaplan J., Greenfield P.M., Iacoboni M. (2006). Observing complex action sequences: the role of the fronto-parietal mirror neuron system. NeuroImage, 33(3), 923–35.

- Oostenveld R., Praamstra P. (2001). The five percent electrode system for high-resolution EEG and ERP measurements. Clinical Neurophysiology, 112(4), 713.

- Palmero-Soler E., Dolan K., Hadamschek V., Tass P.A. (2007). SwLORETA: a novel approach to robust source localization and synchronization tomography. Physics in Medicine and Biology, 52(7), 1783–800.

- Pascual-Marqui R.D. (2002). Standardized low resolution brain electromagnetic tomography (sLORETA): technical details. Methods and Findings in Experimental and Clinical Pharmacology, 24D, 5–12.

- Pavlova M., Guerreschi M., Lutzenberger W., Sokolov A.N., Krägeloh-Mann I. (2010). Cortical response to social interaction is affected by gender. Neuroimage, 50(3), 1327–32.

- Peelen M.V., Downing P.E. (2005). Selectivity for the human body in the fusiform gyrus. Journal of Neurophysiology, 93(1), 603–8.

- Pinkham A.E., Hopfinger J.B., Pelphrey K.A., Piven J., Penn D.L. (2008). Neural bases for impaired social cognition in schizophrenia and autism spectrum disorders. Schizophrenia Research, 99(1–3), 164–75.

- Proverbio A.M. (2017). Sex differences in social cognition: the case of face processing. Journal of Neuroscience Research, 95(1–2), 222–34.

- Proverbio A.M., Adorni R., Zani A., Trestianu L. (2009). Sex differences in the brain response to affective scenes with or without humans. Neuropsychologia, 47(12), 2374–88.

- Proverbio A.M., Brignone V., Matarazzo S., Del Zotto M., Zani A. (2006a). Gender and parental status affect the visual cortical response to infant facial expression. Neuropsychologia, 44(14), 2987–99.

- Proverbio A.M., Brignone V., Matarazzo S., Del Zotto M., Zani A. (2006b). Gender differences in hemispheric asymmetry for face processing. BMC Neuroscience, 7(1), 44.

- Proverbio A.M., Calbi M., Manfredi M., Zani A. (2014). Comprehending body language and mimics: an ERP and neuroimaging study on Italian actors and viewers. PLoS One, 9(3), e91294.

- Proverbio A.M., Crotti N., Manfredi M., Adorni R., Zani A. (2012a). Who needs a referee? How incorrect basketball actions are automatically detected by basketball players’ brain. Scientific Reports, 2(1), 883.

- Proverbio A.M., Gabaro V., Orlandi A., Zani A. (2015). Semantic brain areas are involved in gesture comprehension: an electrical neuroimaging study. Brain and Language, 147, 30–40.

- Proverbio A.M., Galli J. (2016). Women are better at seeing faces where there are none: an ERP study of face pareidolia. Social Cognitive and Affective Neuroscience, 11(9), 1501–12.

- Proverbio A.M., Matarazzo S., Brignone V., Del Zotto M., Zani A. (2007). Processing valence and intensity of infant expressions: the roles of expertise and gender. Scandinavian Journal of Psychology, 48(6), 477–85.

- Proverbio A.M., Mazzara R., Riva F., Manfredi M. (2012b). Sex differences in callosal transfer and hemispheric specialization for face coding. Neuropsychologia, 50(9), 2325–32.

- Proverbio A.M., Riva F. (2009). RP and N400 ERP components reflect semantic violations in visual processing of human actions. Neuroscience Letters, 459(3), 142–6.

- Proverbio A.M., Riva F., Zani A. (2010a). When neurons do not mirror the agent’s intentions: sex difference in neural coding of goal-directed actions. Neuropsychologia, 48(5), 1454–63.

- Proverbio A.M., Riva F., Martin E., Zani A. (2010b). Face coding is bilateral in the female brain. PLoS One, 5(6), e11242.

- Proverbio A.M., Riva F., Paganelli L., et al (2011a). Neural coding of cooperative vs. affective human interactions: 150 ms to code the action’s purpose. PLoS One, 6(7), e22026.

- Proverbio A.M., Riva F., Zani A., Martin E. (2011b). Is it a baby? Perceived age affects brain processing of faces differently in women and men. Journal of Cognitive Neuroscience, 23(11), 3197–208.

- Redcay E., Dodell-Feder D., Mavros P.L., et al (2013). Atypical brain activation patterns during a face-to-face joint attention game in adults with autism spectrum disorder. Human Brain Mapping, 34(10), 2511–23.

- Rehnman J., Herlitz A. (2007). Women remember more faces than men do. Acta Psychologica, 124(3), 344–55.

- Reid V.M., Striano T. (2008). N400 involvement in the processing of action sequences. Neuroscience Letters, 433(2), 93–7.

- Rizzolatti G., Fogassi L., Gallese V. (2001). Neurophysiological mechanisms underlying the understanding and imitation of action. Nature Reviews Neuroscience, 2(9), 661–70.

- Rossion B., Schiltz C., Crommelinck M. (2003). The functionally defined right occipital and fusiform “face areas” discriminate novel from visually familiar faces. Neuroimage, 19(3), 877–83.

- Ruby P., Decety J. (2003). What you believe versus what you think they believe: a neuroimaging study of conceptual perspective-taking. European Journal of Neuroscience, 17(11), 2475–80.

- Sadeh B., Podlipsky I., Zhdanov A., Yovel G. (2010). Event-related potential and functional MRI measures of face-selectivity are highly correlated: a simultaneous ERP-fMRI investigation. Human Brain Mapping, 31(10), 1490–501.

- Sagiv N., Bentin S. (2001). Structural encoding of human and schematic faces: holistic and part-based processes. Journal of Cognitive Neuroscience, 13(7), 937–51.

- Saxe R., Kanwisher N. (2003). People thinking about thinking people: the role of the temporo-parietal junction in “theory of mind”. Neuroimage, 19(4), 1835–42.

- Schwarzlose R.F., Baker C.I., Kanwisher N. (2005). Separate face and body selectivity on the fusiform gyrus. Journal of Neuroscience, 25(47), 11055–9.

- Shibata H., Gyoba J., Suzuki Y. (2009). Event-related potentials during the evaluation of the appropriateness of cooperative actions. Neuroscience Letters, 452(2), 189–93.

- Sinke C., Sorger B., Goebel R., de Gelder B. (2010). Tease or threat? Judging social interactions from bodily expressions. Neuroimage, 49(2), 1717–27.

- Sitnikova T., Kuperberg G., Holcomb P.J. (2003). Semantic integration in videos of real-world events: an electrophysiological investigation. Psychophysiology, 40(1), 160–4.

- Slaughter V., Stone V.E., Reed C. (2004). Perception of faces and bodies: similar or different? Current Directions in Psychological Science, 13(6), 219–23.

- Snedecor G.W., Cochran W.G. (1989). Statistical Methods, 8th edn. Ames, Iowa: Iowa State University Press.

- Sokolov A.A., Krüger S., Enck P., Krägeloh-Mann I., Pavlova M.A. (2011). Gender affects body language reading. Frontiers in Psychology, 2, 16.

- Sun Y., Gao X., Han S. (2010). Sex differences in face gender recognition: an event-related potential study. Brain Research, 1327, 69–76.

- Tanabe H.C., Kosaka H., Saito D.N., et al (2012). Hard to “tune in”: neural mechanisms of live face-to-face interaction with high-functioning autistic spectrum disorder. Frontiers in Human Neuroscience, 6(6), 268.

- Tanaka J.W., Sung A. (2016). The “eye avoidance” hypothesis of autism face processing. Journal of Autism and Developmental Disorders, 46(5), 1538–52.

- Tikhonov A.N. (1963). Solution of incorrectly formulated problems and the regularization method. Doklady Akademii Nauk SSSR, 151, 501–4.

- Tiedt H.O., Weber J.E., Pauls A., Beier K.M., Lueschow A. (2013). Sex-differences of face coding: evidence from larger right hemispheric M170 in men and dipole source modelling. PLoS One, 8(7), e69107.

- Van den Stock J., Righart R., de Gelder B. (2007). Body expressions influence recognition of emotions in the face and voice. Emotion, 7(3), 487–94.

- Verleger R., Jaśkowski P., Wascher E. (2005). Evidence for an integrative role of P3b in linking reaction to perception. Journal of Psychophysiology, 19(3), 165–81.

- Wallace S., Coleman M., Bailey A. (2008). Face and object processing in autism spectrum disorders. Autism Research, 1(1), 43–51.

- Wang J., Kitayama S., Han S. (2011). Sex difference in the processing of task-relevant and task-irrelevant social information: an event-related potential study of familiar face recognition. Brain Research, 1408, 41–51.

- Wu Y.C., Coulson S. (2005). Meaningful gestures: electrophysiological indices of iconic gesture comprehension. Psychophysiology, 42(6), 654–67.

- Wu Y.C., Coulson S. (2007). Iconic gestures prime related concepts: an ERP study. Psychonomic Bulletin and Review, 14(1), 57–63.

- Gu Y., Mai X., Luo Y.J. (2013). Do bodily expressions compete with facial expressions? Time course of integration of emotional signals from the face and the body. PLoS One, 8(7), e66762.